Introduction

Compute Express Link (CXL) was first introduced in March 2019 with the publication of CXL Specification 1.0 and swiftly gained popularity in the High-Performance Computing (HPC) and Enterprise Cloud sectors.

CXL is a recent interconnect standard for linking processors, memory, and accelerators inside data center systems.

It offers rapid, low-latency connections that let data centers interface with computing devices quickly, cutting down on latency and removing bottlenecks.

It is a cutting-edge technology that has the potential to greatly enhance data centers’ productivity and effectiveness as well as give applications and services a better level of dependability and scalability.

This glossary contains everything you need to know about Compute Express Link.

What is a Compute Express Link?

Compute Express Link (CXL) is an open standard connection based on PCIe that maintains a unified memory space for rapid communication between an attached accelerator device and the CPU, also known as the host processor.

Compute Express Link is an industry-supported Cache-Coherent Interconnect for Processors, Memory Expansion, and Accelerators.

CXL provides a high-speed, low-latency interconnect that facilitates effective communication between various components of a computing system.

It focuses mainly on linking accelerators to the memory subsystem and the main processor (such as a CPU).

How does Compute Express Link work?

The CPU communicates with the accelerator device via the CXL interface when it has to perform a task that is best left to an accelerator device.

The accelerator then accesses the required information from memory or storage, completes the process, and transmits the outcomes to the CPU over the CXL interface.

The CXL interface can be used in the case of a virtual machine (VM) to offload duties from the CPU to an accelerator device designated for the VM.

Using the CXL interface, the accelerator device interacts with the virtual machine, conducting operations and exchanging data as required.

Containers are handled the same way: over the CXL interface, the accelerator device communicates with the container, performing tasks and exchanging data as necessary.

The CXL interface offers a quick, effective mechanism for the CPU, accelerator hardware, virtual machines, and containers to exchange data and offload duties in all circumstances, improving system performance and cutting latency.

Why is CXL needed?

Data centers must adapt to accommodate more sophisticated and demanding workloads as the amount of available data grows.

The decades-old server architecture is being changed to enable high-performance computing systems to handle the enormous amounts of data produced by AI/ML applications.

Here is where CXL steps in: it provides effective resource sharing/pooling for improved performance, minimizes the demand for intricate software, and decreases total system costs.

CXL Benefits

CXL has a variety of benefits for both businesses and data center operators, including:

- Offering low-latency connectivity and memory coherency to enhance performance and reduce costs

- Enabling more capacity and bandwidth than the available memory slots can accommodate

- Adding more memory to a CPU host processor through a CXL-attached device

- Allowing the CPU to use additional memory in conjunction with DRAM memory

CXL Specification

CXL Standard 1.0 based on PCIe 5.0 was released on March 11, 2019. With a cache-coherent protocol, it enables the host CPU to access shared memory on accelerator devices. In June 2019, CXL Standard 1.1 was released.

The CXL Standard 2.0 was issued on November 10, 2020.

In addition to implementing device integrity and data encryption, the new version adds support for CXL switching, enabling the connection of multiple CXL 1.x and 2.0 devices to a CXL 2.0 host processor as well as pooling each device to multiple host processors in distributed shared memory and disaggregated storage configurations.

The PCIe 5.0 PHY is still used by CXL 2.0, hence there is no bandwidth gain over CXL 1.x.

The CXL Specification 3.0, based on the PCIe 6.0 physical interface and PAM-4 coding with twice the bandwidth, was released on August 2, 2022.

Its new features include fabric capabilities with multi-level switching and multiple device types per port, as well as improved coherency with peer-to-peer DMA and memory sharing.
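As a rough illustration of the bandwidth step between generations, the sketch below computes raw per-direction link bandwidth from the per-lane transfer rate and the lane count. It assumes the commonly quoted rates of 32 GT/s per lane for PCIe 5.0 and 64 GT/s per lane for PCIe 6.0, treats one transfer as one bit per lane, and ignores encoding and protocol overhead, so real throughput is lower. The names `PHY_RATE_GTS` and `raw_bandwidth_gb_per_s` are illustrative only.

```python
# Per-lane transfer rate (GT/s) of the PHY each CXL generation builds on.
# Assumption: 32 GT/s for PCIe 5.0 and 64 GT/s for PCIe 6.0.
PHY_RATE_GTS = {
    "CXL 1.x": 32,
    "CXL 2.0": 32,
    "CXL 3.0": 64,
}


def raw_bandwidth_gb_per_s(generation: str, lanes: int = 16) -> float:
    """Raw one-direction bandwidth in GB/s, ignoring all overhead."""
    gigabits_per_second = PHY_RATE_GTS[generation] * lanes
    return gigabits_per_second / 8  # 8 bits per byte


if __name__ == "__main__":
    for gen in PHY_RATE_GTS:
        bw = raw_bandwidth_gb_per_s(gen)
        print(f"{gen}: x16 link, about {bw:.0f} GB/s per direction (raw)")
```

Under these assumptions an x16 link moves from roughly 64 GB/s to roughly 128 GB/s per direction, which is the doubling attributed to CXL 3.0.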

CXL Consortium

A brand-new, high-speed CPU-to-device and CPU-to-memory interconnect called Compute Express Link (CXL) was created to boost the performance of next-generation data centers.

The CXL Consortium was established in early 2019 and incorporated in Q3 of 2019. By maintaining memory coherency between the memory of attached devices and the CPU, CXL technology enables resource sharing for improved performance, a more straightforward software stack, and lower system costs.

Thanks to this, users may now simply concentrate on their intended workloads rather than the redundant memory management hardware in their accelerators.

The CXL Consortium is an open industry standard organization formed to develop technical specifications that promote an open ecosystem for data center accelerators and other high-speed enhancements while enabling breakthrough performance for new usage models.

CXL standards and protocols

The Compute Express Link (CXL) standard supports a variety of use cases via three protocols: CXL.io, CXL.cache, and CXL.mem (also written CXL.memory).

CXL.io: This protocol uses PCIe’s widespread industry acceptance and familiarity and is functionally equivalent to the PCIe 5.0 protocol. CXL.io, the fundamental communication protocol, is adaptable and covers a variety of use cases.

CXL.cache: Accelerators can efficiently access and cache host memory using this protocol, which was created for more specialized applications to achieve optimal performance.

CXL.mem: Using load/store commands, this protocol enables a host, such as a CPU, to access device-attached memory.

Together, these three protocols make it possible for computer components, such as a CPU host and an AI accelerator, to share memory resources coherently. In essence, this facilitates communication through shared memory, which simplifies programming.

While CXL.io has its own link and transaction layer, CXL.cache and CXL.mem are combined and share a common link and transaction layer.

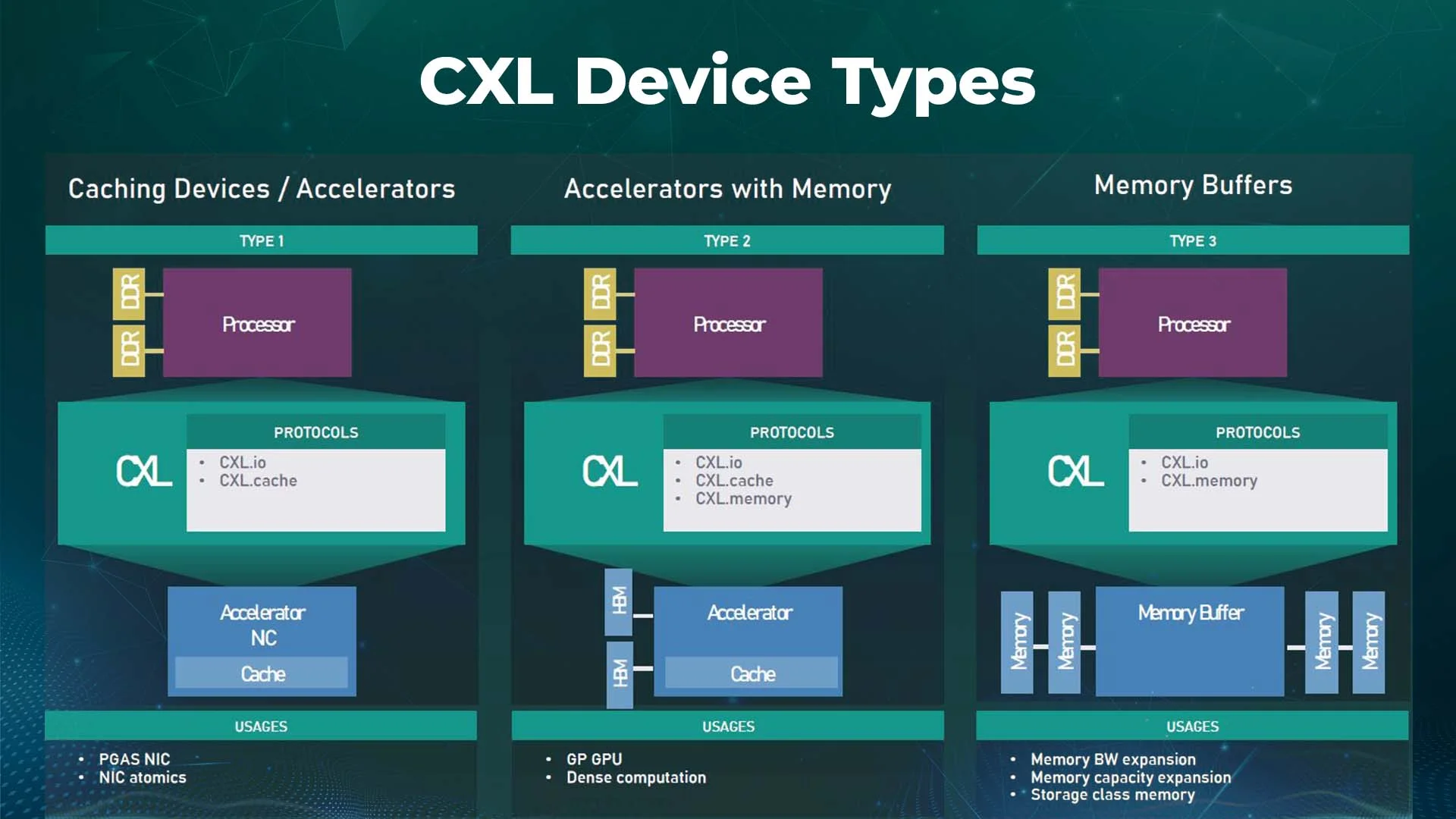

CXL device types

The CXL Consortium refers to the first as a Type 1 device: an accelerator with no host-managed device memory of its own.

This type of device uses the CXL.io protocol, which is required, and CXL.cache to communicate with the host processor’s DDR memory as if it were its own.

One possible example is a smart network interface card that could benefit from caching.

GPUs, ASICs, and FPGAs are Type 2 devices. Each uses the CXL.mem protocol in addition to the CXL.io and CXL.cache protocols and has its own DDR or High Bandwidth Memory (HBM).

When all three protocols are active, the host processor’s memory and the accelerator’s memory are both made locally available to each other.

Additionally, they share a cache coherence domain, which significantly benefits heterogeneous workloads.

The final use case, the Type 3 device, is memory expansion, made possible by the CXL.io and CXL.mem protocols.

When running high-performance applications, a buffer connected to the CXL bus could be utilized to increase memory bandwidth, add persistent memory, or expand DRAM capacity without taking up valuable DRAM slots.

With CXL, high-speed, low-latency storage devices that would previously have displaced DRAM can now complement it, and non-volatile technologies become available in add-in card and EDSFF form factors.

Let’s examine several CXL device types and the particular CXL interconnect verification issues, such as preserving cache coherency between a host CPU and an accelerator.

Type 1 CXL Device:

- Implements a fully coherent cache but no host-managed device memory

- Extends PCIe protocol capability (for example, atomic operations)

- May need to implement a custom ordering model

- Applicable transaction types: D2H coherent and H2D snoop transactions

Type 2 CXL Device:

- Implements an optional coherent cache and host-managed device memory

- Typical applications are devices that have high-bandwidth memories attached

- Applicable transaction types: All CXL.cache/mem transactions

Type 3 CXL Device:

- Only has CXL host-managed device memory

- The typical application is a memory expander for the host

- Applicable transaction types: CXL.mem MemRd and MemWr transactions
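The mapping between device types and protocols described above can be captured in a few lines. This is only an illustrative sketch: the `Protocol` flag, the `DEVICE_PROTOCOLS` table, and the `classify` helper are hypothetical names, not part of any CXL software interface.

```python
from enum import Flag, auto


class Protocol(Flag):
    """The three CXL protocols."""
    IO = auto()     # CXL.io, required for every device type
    CACHE = auto()  # CXL.cache, device caches host memory
    MEM = auto()    # CXL.mem, host accesses device-attached memory


# Protocol set used by each device type, as listed in the text above.
DEVICE_PROTOCOLS = {
    1: Protocol.IO | Protocol.CACHE,                 # e.g. smart NIC
    2: Protocol.IO | Protocol.CACHE | Protocol.MEM,  # e.g. GPU, FPGA
    3: Protocol.IO | Protocol.MEM,                   # memory expander
}


def classify(protocols: Protocol) -> int:
    """Return the device type whose protocol set matches exactly."""
    for device_type, required in DEVICE_PROTOCOLS.items():
        if protocols == required:
            return device_type
    raise ValueError(f"no CXL device type uses exactly {protocols!r}")


if __name__ == "__main__":
    print(classify(Protocol.IO | Protocol.MEM))  # memory expander
```

Because CXL.io appears in every entry, a protocol set without it matches no device type and `classify` raises `ValueError`.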

To know more about CXL Device types, check out our blog by clicking here: https://www.logic-fruit.com/blog/cxl/compute-express-link-cxl-device-types/

Generations of CXL

CXL continues to evolve. So far there are CXL 1.0/1.1, CXL 2.0, and CXL 3.0, with more to come.

Because CXL is closely tied to PCIe, new versions of CXL depend on new versions of PCIe, with about a two-year gap between releases of PCIe and an even longer gap between the release of new specifications and products coming to market.

Now, it’s time to take a closer look at CXL 2.0 and CXL 3.0:

What is CXL 2.0

CXL has been one of the more intriguing connection standards in recent months.

Built on top of a PCIe physical basis, CXL is a connectivity standard intended to manage considerably more than PCIe can.

In addition to carrying data between hosts and devices, CXL supports three protocol branches: IO, Cache, and Memory.

These three constitute the core of a novel method of connecting a host with a device, as defined in the CXL 1.0 and 1.1 standards. The updated CXL 2.0 standard advances it.

There are no bandwidth or latency upgrades in CXL 2.0 because it is still based on the same PCIe 5.0 physical standard, but it does include certain much-needed PCIe-specific features that users are accustomed to.

The same CXL.io, CXL.cache, and CXL.mem protocols, which govern how data is handled and in what context, are at the heart of CXL 2.0; however, switching capabilities, additional encryption, and support for persistent memory have been introduced.

CXL 2.0 Features and Benefits

Memory Pooling:

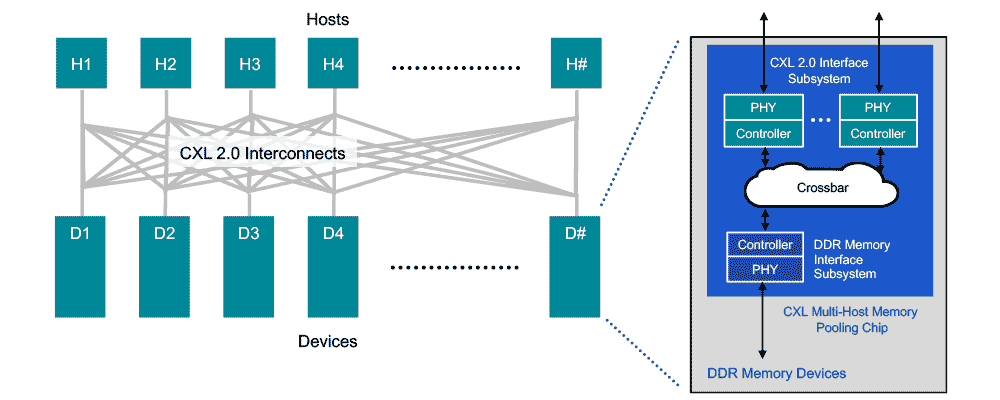

Switching is supported by CXL 2.0 to enable memory pooling. A host can access one or more devices from the pool via a CXL 2.0 switch.

Although the hosts must support CXL 2.0 to take advantage of this feature, a combination of CXL 1.0, 1.1, and 2.0-enabled hardware can be used in the memory devices.

A device can only function as a single logical device accessible by one host at a time under version 1.0/1.1.

However, a 2.0-level device can be divided into numerous logical devices, enabling up to 16 hosts to simultaneously access various parts of the memory.

To precisely match the memory needs of its workload to the available capacity in the memory pool, host 1 (H1), for instance, can use half the memory in device 1 (D1) and a quarter of the memory in device 2 (D2).

The remaining capacity in devices D1 and D2 can be used by one or more of the other hosts, up to a maximum of 16 hosts per device. Only one host at a time may use each of devices D3 and D4, which are CXL 1.0 and 1.1 compatible, respectively.
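The pooling rules above can be modelled with a small sketch. It is a toy model, not how a CXL switch or fabric manager is actually programmed: the `MemoryDevice` class, its methods, and the 64 GB capacities are hypothetical, chosen only to replay the H1/D1/D2 example.

```python
from dataclasses import dataclass, field
from typing import Dict

MAX_LOGICAL_DEVICES = 16  # hosts per CXL 2.0 device, as stated above


@dataclass
class MemoryDevice:
    name: str
    capacity_gb: int
    cxl_version: str  # "1.0", "1.1" or "2.0"
    allocations: Dict[str, int] = field(default_factory=dict)  # host -> GB

    def max_hosts(self) -> int:
        # A 1.0/1.1 device is a single logical device for one host.
        return MAX_LOGICAL_DEVICES if self.cxl_version == "2.0" else 1

    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.allocations.values())

    def allocate(self, host: str, size_gb: int) -> None:
        new_host = host not in self.allocations
        if new_host and len(self.allocations) >= self.max_hosts():
            raise RuntimeError(f"{self.name}: host limit reached")
        if size_gb > self.free_gb():
            raise RuntimeError(f"{self.name}: not enough free capacity")
        self.allocations[host] = self.allocations.get(host, 0) + size_gb


if __name__ == "__main__":
    d1 = MemoryDevice("D1", 64, "2.0")  # capacities are made up
    d2 = MemoryDevice("D2", 64, "2.0")
    d1.allocate("H1", d1.capacity_gb // 2)  # half of D1
    d2.allocate("H1", d2.capacity_gb // 4)  # a quarter of D2
    print(d1.free_gb(), d2.free_gb())       # capacity left for other hosts
```

A device created with `cxl_version="1.0"` or `"1.1"` rejects a second host, which mirrors the single-logical-device restriction on D3 and D4.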

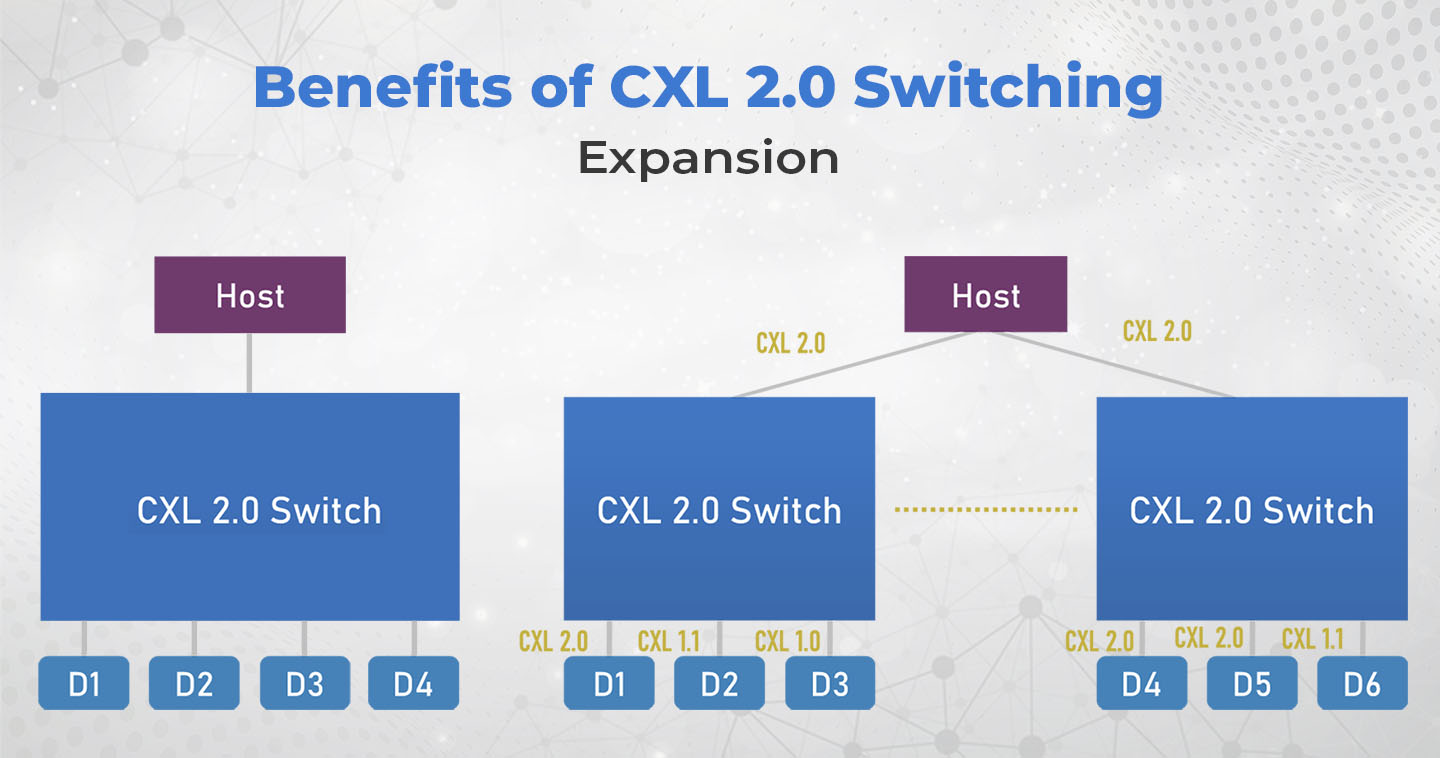

CXL 2.0 Switching:

For readers unfamiliar with PCIe switches: a switch connects to a host processor with a certain number of lanes, such as eight or sixteen, and then provides a larger number of lanes downstream to increase the number of supported devices.

A typical PCIe switch, for instance, would have an x16 link to the CPU but 48 PCIe lanes downstream to support six linked GPUs at x8 each.

Although there is an upstream bottleneck, switching is the best option for workloads that depend on GPU-to-GPU transmission, especially on systems with constrained CPU lanes.
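The upstream bottleneck can be quantified as an oversubscription ratio, sketched below using the lane counts from the example. The function names are illustrative, and the calculation assumes every lane runs at the same speed.

```python
def downstream_lanes(devices: int, lanes_per_device: int) -> int:
    """Total lanes a switch must provide below itself."""
    return devices * lanes_per_device


def oversubscription_ratio(upstream: int, downstream: int) -> float:
    """Downstream lanes competing for each upstream lane."""
    return downstream / upstream


if __name__ == "__main__":
    down = downstream_lanes(devices=6, lanes_per_device=8)  # six GPUs at x8
    ratio = oversubscription_ratio(upstream=16, downstream=down)
    print(f"{down} downstream lanes, {ratio:.0f}:1 oversubscription")
```

With these numbers the switch is oversubscribed 3:1 towards the CPU, while GPU-to-GPU traffic that stays below the switch never touches the upstream link.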

Compute Express Link 2.0 now supports the switching standard.

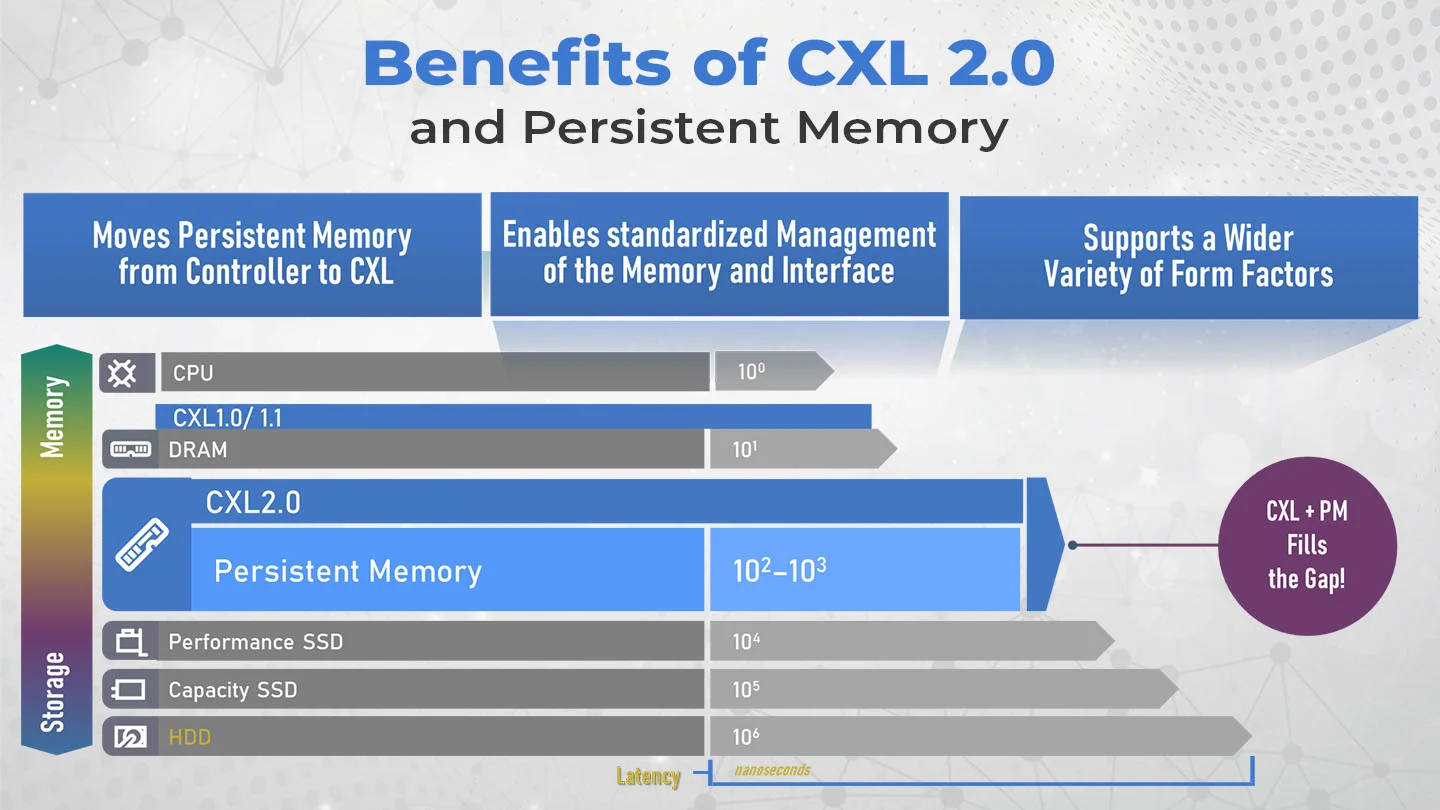

CXL 2.0 Persistent Memory:

Persistent memory, which is nearly as quick as DRAM yet retains data like NAND, is a development in enterprise computing that has emerged in recent years.

It has long been unclear whether such memory would operate as slow high-capacity memory through a DRAM interface or as compact, quick storage through a storage-like interface.

The CXL.mem protocol in the original CXL specifications did not directly support persistent memory unless it was already attached to a device. CXL 2.0 adds further PMEM support, building on its pooled resources.

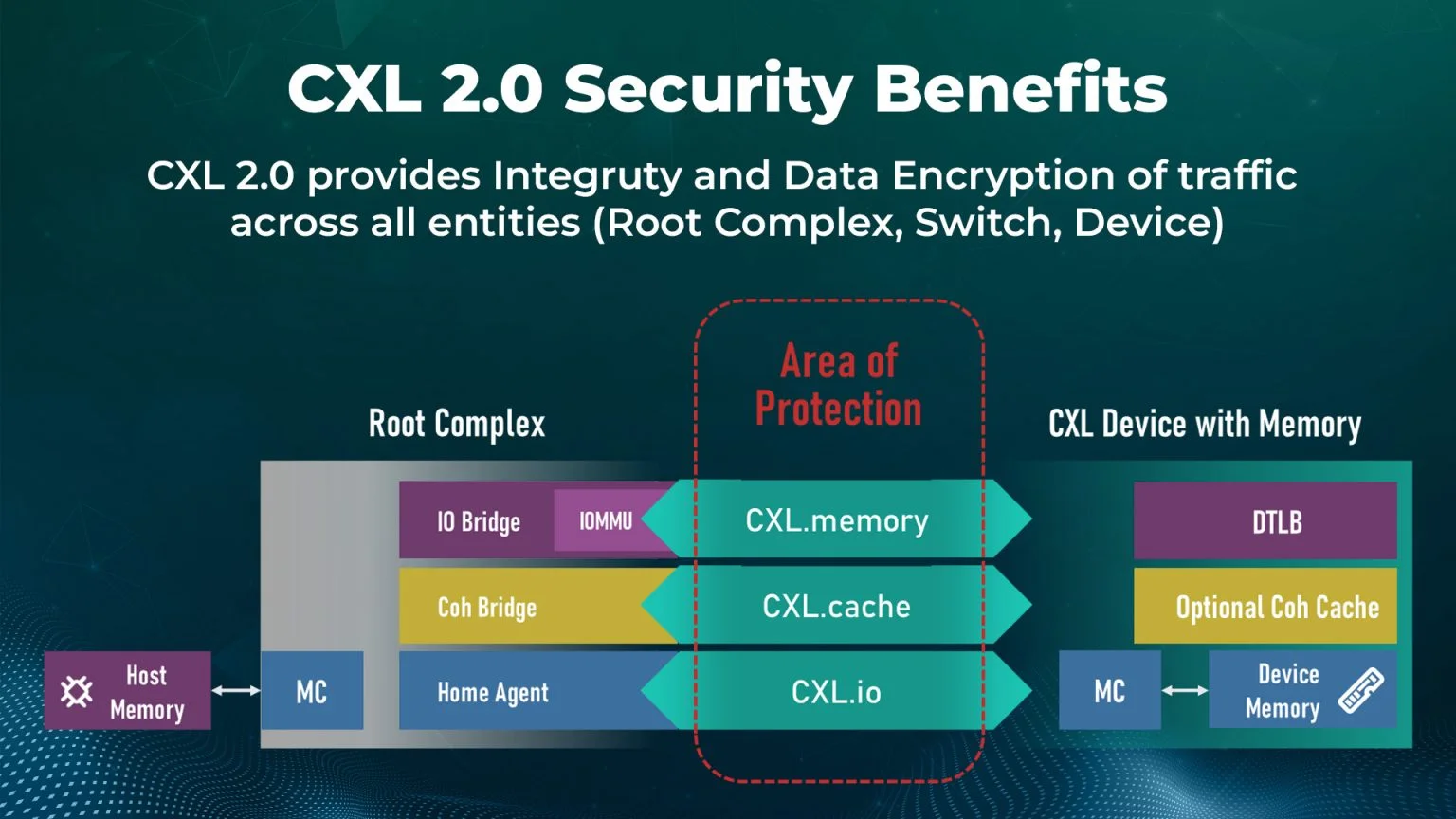

CXL 2.0 Security

Point-to-point security for any CXL link is the final but, in some eyes, most significant feature improvement.

The hardware acceleration present in CXL controllers now permits any-to-any communication encryption, which is supported by the CXL 2.0 standard.

This is an optional component of the standard, meaning that silicon providers do not have to build it in, or if they do, it can be enabled or disabled.

CXL 2.0 specification

With full backward compatibility with CXL 1.1 and 1.0, the CXL 2.0 Specification preserves industry investments while adding support for switching for fan-out to connect to more devices, memory pooling to increase memory utilization efficiency and provide memory capacity on demand, and persistent memory.

Key Highlights of the CXL 2.0 Specification:

- Adds support for switching to enable resource migration, memory scaling, and device fanout.

- Support for memory pooling is provided to increase memory utilization and reduce or eliminate the need for overprovisioning memory.

- Provides confidentiality, integrity, and replay protection for data transiting the CXL link by adding link-level Integrity and Data Encryption (CXL IDE).
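To make "integrity and replay protection" concrete, here is a software toy that authenticates each message with a keyed tag and a monotonically increasing counter. It is not how CXL IDE is implemented, since IDE runs in link hardware with its own cipher and key management, and this sketch does not encrypt the payload at all. HMAC-SHA256 from the Python standard library is used purely as a stand-in, and the `Sender` and `Receiver` classes are hypothetical.

```python
import hashlib
import hmac


def _tag(key: bytes, counter: int, payload: bytes) -> bytes:
    """Keyed tag over the counter and payload (stand-in for a link MAC)."""
    message = counter.to_bytes(8, "big") + payload
    return hmac.new(key, message, hashlib.sha256).digest()


class Sender:
    def __init__(self, key: bytes) -> None:
        self.key = key
        self.counter = 0

    def send(self, payload: bytes):
        self.counter += 1  # never reuse a counter value
        return self.counter, payload, _tag(self.key, self.counter, payload)


class Receiver:
    def __init__(self, key: bytes) -> None:
        self.key = key
        self.last_counter = 0

    def receive(self, counter: int, payload: bytes, tag: bytes) -> bytes:
        expected = _tag(self.key, counter, payload)
        if not hmac.compare_digest(tag, expected):
            raise ValueError("integrity check failed")
        if counter <= self.last_counter:
            raise ValueError("replayed or stale message")
        self.last_counter = counter
        return payload


if __name__ == "__main__":
    key = b"demo-key-not-for-real-use"
    tx, rx = Sender(key), Receiver(key)
    print(rx.receive(*tx.send(b"MemWr addr=0x1000")))
```

A modified payload fails the tag comparison, and a captured message sent a second time is rejected because its counter is no longer fresh.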

To know more about Compute Express Link, check out our blog by clicking here: https://www.logic-fruit.com/blog/cxl/compute-express-link-cxl/

What is CXL 3.0

In CXL 3.0, processors, storage, networking, and other accelerators can all be pooled and dynamically addressed by various hosts and accelerators, further disaggregating the architecture of a server. This is similar to how CXL 2.0 handles memory.

Moreover, CXL 3.0 supports direct communication between components/devices across a switch or switch fabric. For instance, two GPUs might communicate with one another without using the host CPU or memory or the network.

Highlights of the CXL 3.0 specification:

- Fabric capabilities

- Multi-head and Fabric Attached Devices

- Enhanced fabric management

- Composable disaggregated infrastructure

- Better scalability and improved resource utilization

- Enhanced memory pooling

- Multi-level switching

- New enhanced coherency capabilities

- Improved software capabilities

- Double the bandwidth to 64 GT/s

- Zero added latency over CXL 2.0

- Full backward compatibility with CXL 2.0, CXL 1.1, and CXL 1.0

Features of CXL 3.0

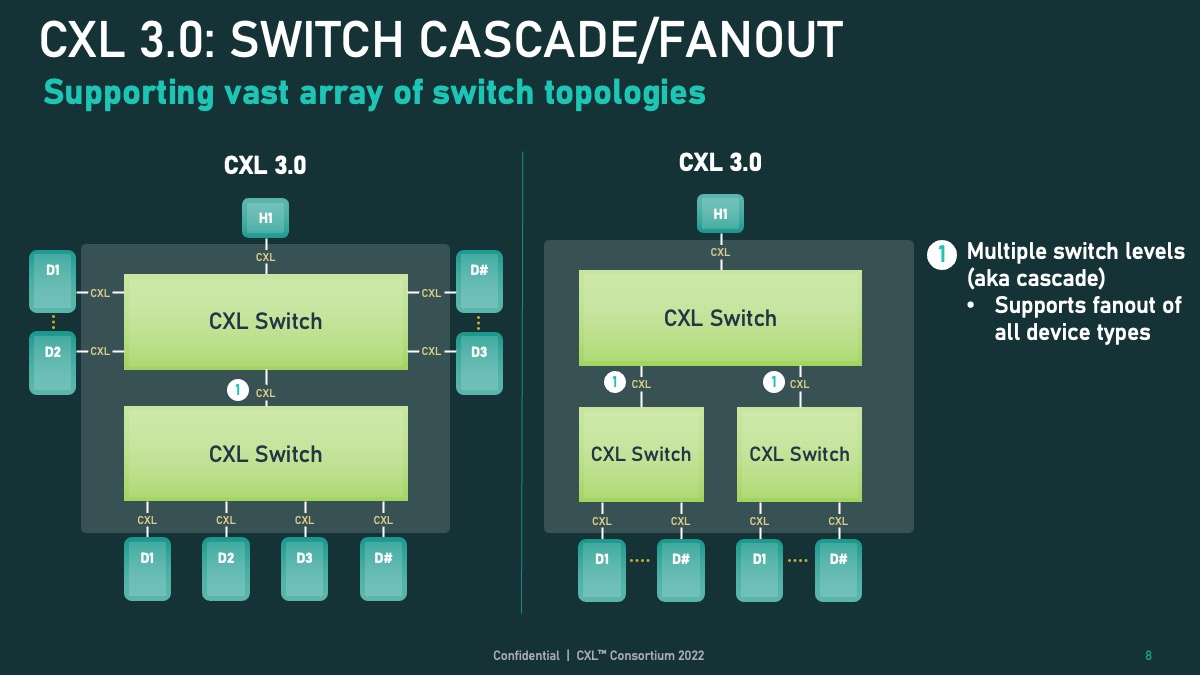

CXL 3.0 switch and fanout capability

The new CXL switch and fanout functionality is one of CXL 3.0’s key features. Switching was added in CXL 2.0, enabling numerous hosts and devices to be on a single level of CXL switches.

The CXL topology can now support many switch layers thanks to CXL 3.0. More devices can be added, and each EDSFF shelf can have a CXL switch in addition to a top-of-rack CXL switch that connects hosts.
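The difference between single-level and multi-level switching can be expressed as a depth check on a topology tree. The sketch below is a simplified model with hypothetical `Switch` and `Device` classes. It assumes CXL 2.0 allows exactly one switch level and does not model any upper limit for CXL 3.0.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Device:
    name: str


@dataclass
class Switch:
    name: str
    children: List[Union["Switch", Device]] = field(default_factory=list)


def switch_depth(node: Union[Switch, Device]) -> int:
    """Number of switch levels on the deepest path starting at node."""
    if isinstance(node, Device):
        return 0
    return 1 + max((switch_depth(child) for child in node.children), default=0)


def fits_cxl_version(root: Switch, version: str) -> bool:
    if version == "2.0":
        return switch_depth(root) <= 1  # single level of switches
    if version == "3.0":
        return True  # multi-level; no depth limit modelled here
    raise ValueError(f"unsupported version: {version}")


if __name__ == "__main__":
    # A top-of-rack switch feeding one switch per EDSFF shelf.
    rack = Switch("top-of-rack", [
        Switch("shelf-1", [Device("mem-a"), Device("mem-b")]),
        Switch("shelf-2", [Device("ssd-a")]),
    ])
    print(switch_depth(rack), fits_cxl_version(rack, "2.0"),
          fits_cxl_version(rack, "3.0"))
```

The rack in the example has two switch levels, so it only fits the CXL 3.0 model, while a single switch with devices directly attached fits both.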

CXL 3.0 device-to-device communications

CXL 3.0 also adds peer-to-peer (P2P) device-to-device communication. P2P allows devices to communicate directly without needing to go through a host.

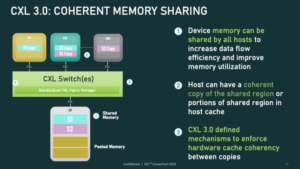

CXL 3.0 Coherent Memory Sharing

With CXL 3.0, coherent memory sharing is now supported. This is significant because it lets systems share memory in ways that go beyond CXL 2.0, which only allows memory devices to be partitioned among various hosts and accelerators.

In contrast, CXL 3.0 permits all hosts in the coherency domain to share memory. As a result, memory is used more effectively. Consider a situation where numerous hosts or accelerators access the same data set: instead of each holding a private copy, they can all work on a single shared copy.

Coherently sharing that memory is a considerably more difficult task, but it also promotes efficiency.

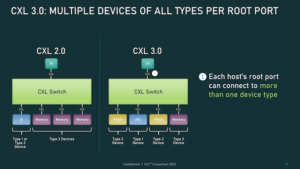

CXL 3.0: Multiple devices of all types per root port

CXL 3.0 removes the prior restrictions on the number of Type-1/Type-2 devices that can be connected downstream of a single CXL root port. CXL 2.0 permitted only one of these processing devices downstream of a root port.

Depending on the objectives of the system builder, a CXL root port can now enable a full mix-and-match setup of Type-1/2/3 devices.

This notably entails increasing density (more accelerators per host) and the utility of the new peer-to-peer transfer features by enabling the attachment of several accelerators to a single switch.
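The root-port rule can be written as a one-function check. This is a sketch of the rule exactly as described in this section, with device types given as plain integers. The function name is hypothetical.

```python
from typing import Iterable


def root_port_allowed(device_types: Iterable[int], version: str) -> bool:
    """Check a set of devices below one root port against the rule above."""
    accelerators = sum(1 for t in device_types if t in (1, 2))
    if version == "2.0":
        return accelerators <= 1  # at most one Type-1/Type-2 device
    if version == "3.0":
        return True               # any mix of Type-1/2/3 devices
    raise ValueError(f"unsupported version: {version}")


if __name__ == "__main__":
    mix = [2, 2, 1, 3]  # two GPUs, a smart NIC and a memory expander
    print(root_port_allowed(mix, "2.0"), root_port_allowed(mix, "3.0"))
```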

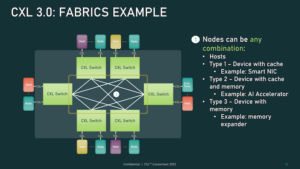

CXL 3.0: Fabrics Example

This versatility allows for non-tree topologies like rings, meshes, and other fabric structures even with only two layers of switches. There are no constraints on the types of individual nodes, and they can be hosts or devices.

CXL 3.0: Fabrics Example Use Cases

CXL 3.0 can even handle spine/leaf designs, where traffic is routed through top-level spine nodes whose sole purpose is to further route traffic back to lower-level (leaf) nodes that in turn contain actual hosts/devices, allowing truly unusual configurations.
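A spine/leaf fabric is easy to explore as a graph. The sketch below builds a two-tier fabric in which every leaf connects to every spine, then finds a shortest path with breadth-first search. The node naming scheme and both function names are made up for illustration. Real CXL fabric routing is defined by the specification and is not modelled here.

```python
from collections import deque
from typing import Dict, List, Optional, Set


def build_spine_leaf(spines: int, leaves: int,
                     endpoints_per_leaf: int) -> Dict[str, Set[str]]:
    """Adjacency map of a fabric where every leaf links to every spine."""
    graph: Dict[str, Set[str]] = {}

    def link(a: str, b: str) -> None:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)

    for l in range(leaves):
        for s in range(spines):
            link(f"leaf{l}", f"spine{s}")
        for e in range(endpoints_per_leaf):
            link(f"leaf{l}", f"node{l}.{e}")  # a host or a device
    return graph


def shortest_path(graph: Dict[str, Set[str]],
                  src: str, dst: str) -> Optional[List[str]]:
    """Breadth-first search; returns the node list or None."""
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        if path[-1] == dst:
            return path
        for nxt in sorted(graph[path[-1]]):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


if __name__ == "__main__":
    fabric = build_spine_leaf(spines=2, leaves=3, endpoints_per_leaf=2)
    print(shortest_path(fabric, "node0.0", "node2.1"))
```

Two endpoints on the same leaf are one switch apart, while endpoints on different leaves cross a leaf, a spine, and a second leaf.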

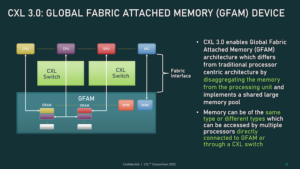

CXL 3.0: Global Fabric Attached Memory

Finally, these new memory, topology, and fabric features can be combined in what the CXL Consortium refers to as Global Fabric Attached Memory (GFAM).

In a nutshell, GFAM advances CXL’s memory expansion board (Type-3) concept by further disaggregating memory from a specific host.

In that sense, a GFAM device is essentially its own shared memory pool that hosts and other devices can access as needed. Additionally, both volatile and non-volatile memory, such as DRAM and flash memory, can be combined in a GFAM device.

How does CXL 3.0 work?

The most recent CXL standard, CXL 3.0, offers several upgrades over earlier iterations. Most significantly, it moves to the PCIe 6.0 physical layer, doubling the transfer rate to 64 GT/s, and adds fabric capabilities with multi-level switching. Other improvements include peer-to-peer communication between devices, coherent memory sharing across hosts, and enhanced memory pooling.

Advantages of CXL 3.0

Compared with plain PCIe, CXL 3.0 has several advantages. Peer-to-peer direct memory access (P2P DMA) is among the most intriguing.

Together with coherent memory sharing, this lets many hosts and devices share the same memory space and resources. As a result, usage-model flexibility and scalability may improve along with performance.

Support for faster speeds and increased power efficiency, which results in better resource use and improved performance, is another advantage of CXL 3.0.

Additionally, CXL 3.0 makes it possible for devices to interact more quickly with one another, which may boost system throughput.

How LFT can help with CXL

LFT can help with CXL in various ways, some of which are listed below:

- LFT has a proven track record on PCIe/CXL Physical Layer and thus, can provide support in the Physical layer.

- LFT can provide support in architecting the various solutions of CXL.

- LFT will release a CXL IP during the first quarter of 2023.

Conclusion:

In order for data centers to handle the complex and demanding workloads brought on by the growing availability of data, CXL is essential.

The numerous CXL devices and protocols all cooperate to improve bidirectional communication and lower latency, which is a crucial element of cross-domain solutions.

This increases situational awareness and shortens response times at the tactical edge in a military context by ensuring that crucial intelligence is delivered safely and quickly.

CXL helps enhance performance while lowering the total cost of ownership through more effective resource sharing (specifically of memory), a simpler computational architecture, interconnectivity between components, and stringent security measures.