Introduction to Compute Express Link:

PCI Express (PCIe) is currently the most widely used interconnect for attaching accelerator devices to host CPUs. It is a high-performance, industry-standard serial I/O interface used across enterprise, desktop, and embedded applications.

PCIe has limitations, however, in systems with several high-bandwidth devices and large shared memory pools. Each PCIe hierarchy shares a single address space (32-bit or 64-bit).

As a result, isolated memory pools cannot be managed efficiently. In addition, the latency of PCIe links is too high to manage shared memory efficiently across multiple devices in a system.

Consequently, compute-intensive workloads need an interconnect with faster data flow to scale heterogeneous computing effectively in the data center.

The Compute Express Link (CXL) uses the electrical and physical interface of PCI Express 5.0 to overcome various restrictions.

The technology enables lower latencies and increases memory capacity and bandwidth.

As accelerators are increasingly used alongside CPUs to support new applications, CXL serves as the interface for high-speed communication between them.

Compute Express Link™ (CXL™) is an industry-supported Cache-Coherent Interconnect for Processors, Memory Expansion, and Accelerators.

What is Compute Express Link

Compute Express Link (CXL) is an open industry-standard interconnect. It offers high-speed connectivity between memory and the many kinds of processors used in modern data centers, including CPUs, TPUs, and GPUs.

Compute Express Link is a new open interconnect standard that targets intensive workloads for CPUs and purpose-built accelerators, where efficient and coherent memory access between a host and a device is required.

Recently, the Compute Express Link 1.0 specification was released along with the announcement of a consortium to enable this new standard.

System-on-chip (SoC) designers need to be aware of the significant Compute Express Link features described in this article in order to decide how best to incorporate this new interconnect technology into their designs for AI, machine learning, and cloud computing applications.

CXL uses the physical layer technology of PCIe 5.0 to establish a shared memory space for the host and all connected devices.

Because CXL is a cache-coherent standard, the host CPU and CXL devices see the same data when they access it.

Coherency management is primarily the responsibility of the host CPU. This lets the CPU and device share resources for improved performance and a simpler software stack, which lowers overall device cost.

How does compute express link work

CXL is an interconnect specification built on PCIe lanes. It provides a link between the CPU and memory.

Because it is based on the PCIe 5.0 electrical interface, CXL runs at 32 billion transfers per second (32 GT/s) per lane, or up to 64 GB/s in each direction (128 GB/s in both directions combined) over 16 lanes.

The CPU memory space and the memory on attached devices are kept coherent.

Compute Express Link interconnect

The semiconductor industry is undertaking a ground-breaking architectural shift to fundamentally alter the performance, efficiency, and cost of data centers in response to the exponential explosion of data.

To handle the yottabytes of data produced by AI/ML applications, server architecture—which has mostly stayed unchanged for decades—is now making a revolutionary leap.

A disaggregated “pooling” paradigm that intelligently matches resources to workloads is replacing the model in which each server has its own dedicated processing, memory, networking hardware, and accelerators.

Compute Express Link specification

The CXL Specification 1.0 based on PCIe 5.0 was released on March 11, 2019.

A coherent cache protocol enables the host CPU to access shared memory on accelerator devices. In June 2019, the CXL Specification 1.1 was released.

The CXL Specification 2.0 was issued on November 10, 2020.

The updated version adds integrity and data encryption as well as CXL switching, which allows multiple CXL 1.x and 2.0 devices to be connected to a CXL 2.0 host processor and each device to be pooled across multiple host processors in distributed shared memory and disaggregated storage configurations.

Because CXL 2.0 continues to use the PCIe 5.0 PHY, there is no bandwidth gain over CXL 1.x.

The next edition of the CXL standard, based on the PCIe 6.0 PHY, is anticipated in H1 2022.

Compute Express Link Protocols & Standards

The Compute Express Link (CXL) standard supports a variety of use cases via three protocols: CXL.io, CXL.cache, and CXL.memory.

- CXL.io: This protocol uses PCIe’s widespread industry acceptance and familiarity and is functionally equivalent to the PCIe 5.0 protocol. CXL.io, the fundamental communication protocol, is adaptable and covers a variety of use cases.

- CXL.cache: Accelerators can efficiently access and cache host memory using this protocol, which was created for more specialized applications to achieve optimal performance.

- CXL.memory: Using load/store commands, this protocol enables a host, such as a CPU, to access device-attached memory.

Together, these three protocols make it possible for computer components, such as a CPU host and an AI accelerator, to share memory resources coherently. In essence, this facilitates communication through shared memory, which simplifies programming.
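As a minimal sketch of how the three protocols divide up the work, the snippet below models them as flags and lists which memory accesses a given combination permits. The names `CxlProtocol`, `allowed_accesses`, `accelerator`, and `memory_expander` are illustrative assumptions, not identifiers from the CXL specification.

```python
from enum import Flag, auto
from typing import List


class CxlProtocol(Flag):
    """The three CXL sub-protocols named in the text (illustrative model)."""
    IO = auto()     # CXL.io: PCIe-equivalent base protocol
    CACHE = auto()  # CXL.cache: device accesses and caches host memory
    MEM = auto()    # CXL.mem: host loads/stores to device-attached memory


def allowed_accesses(protocols: CxlProtocol) -> List[str]:
    """Return the coherent memory accesses a link with these protocols permits."""
    accesses = []
    if CxlProtocol.CACHE in protocols:
        accesses.append("device -> host memory (cached)")
    if CxlProtocol.MEM in protocols:
        accesses.append("host -> device memory (load/store)")
    return accesses


# Hypothetical devices: an accelerator with its own memory, and a memory expander.
accelerator = CxlProtocol.IO | CxlProtocol.CACHE | CxlProtocol.MEM
memory_expander = CxlProtocol.IO | CxlProtocol.MEM

print(allowed_accesses(accelerator))
print(allowed_accesses(memory_expander))
```

A device that only runs CXL.io gets no coherent access in either direction in this model, which matches the text's description of CXL.io as the PCIe-equivalent base protocol.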

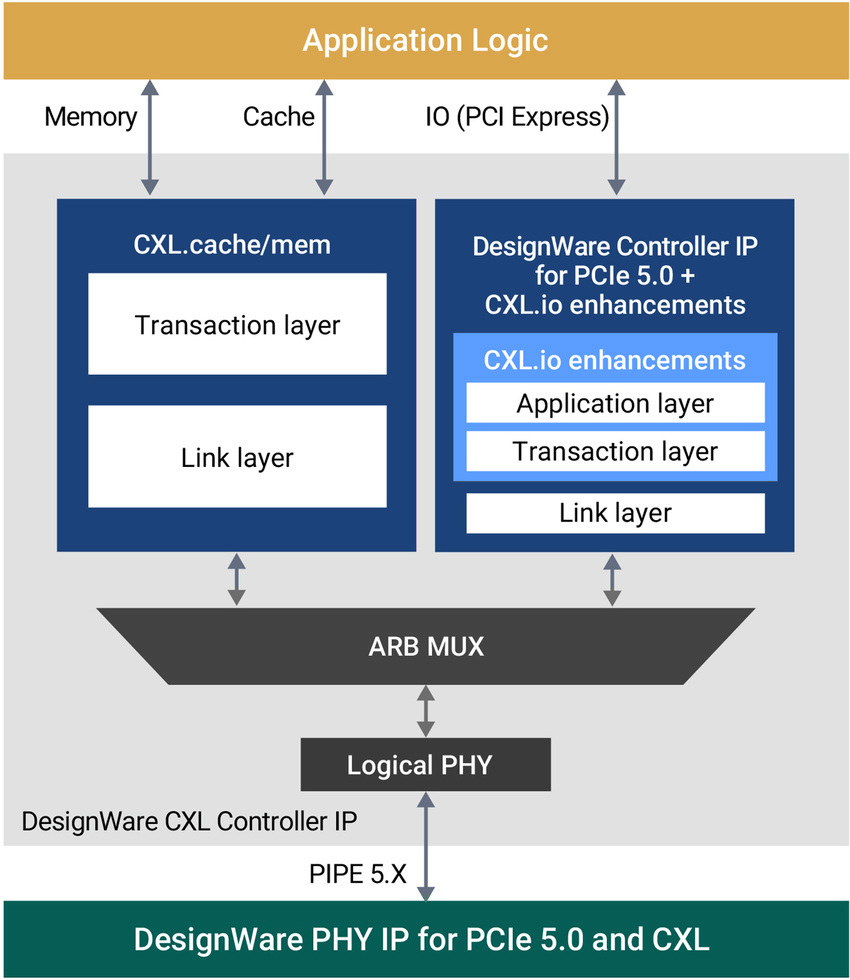

While CXL.io has its own link and transaction layer, CXL.cache and CXL.mem are combined and share a common link and transaction layer.

Block diagram of a CXL device showing PHY, controller, and application

Before being handed over to the PCIe 5.0 PHY for transmission at 32GT/s, the data from each of the three protocols is dynamically multiplexed together by the Arbitration and Multiplexing (ARB/MUX) block.

The ARB/MUX uses weighted round-robin arbitration with weights determined by the Host to arbitrate between requests from the CXL link layers (CXL.io and CXL.cache/mem) and multiplexes the Flit data based on the arbitration results.

The ARB/MUX also manages link-layer requests for power state transitions, resulting in a single request to the physical layer for a smooth power-down process.
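The weighted round-robin arbitration described above can be sketched in a few lines. This is a software illustration of the general technique only; the queue contents and the weights (1 for CXL.io, 2 for CXL.cache/mem) are assumptions chosen for the example, and the real ARB/MUX is hardware whose weights are set by the host.

```python
from collections import deque
from typing import Deque, Dict, List


def weighted_round_robin(queues: Dict[str, Deque[str]],
                         weights: Dict[str, int]) -> List[str]:
    """Drain all queues, granting each up to weights[name] flits per round."""
    order: List[str] = []
    while any(queues.values()):          # an empty deque is falsy
        for name, queue in queues.items():
            for _ in range(weights[name]):
                if not queue:
                    break                # nothing pending; move to next requester
                order.append(queue.popleft())
    return order


# Hypothetical pending flits from the two link layers.
queues = {
    "CXL.io": deque(["io0", "io1", "io2"]),
    "CXL.cache/mem": deque(["cm0", "cm1", "cm2", "cm3"]),
}
weights = {"CXL.io": 1, "CXL.cache/mem": 2}

granted = weighted_round_robin(queues, weights)
print(granted)  # ['io0', 'cm0', 'cm1', 'io1', 'cm2', 'cm3', 'io2']
```

With these weights the CXL.cache/mem link layer receives two grants for every CXL.io grant while both have traffic pending, and an idle requester never blocks the other.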

Each flit is divided into slots, which are defined in several formats and can be allocated to the CXL.cache or CXL.mem protocols.

The flit header specifies the slot formats and carries the information the transaction layer needs to route data to the correct protocol.

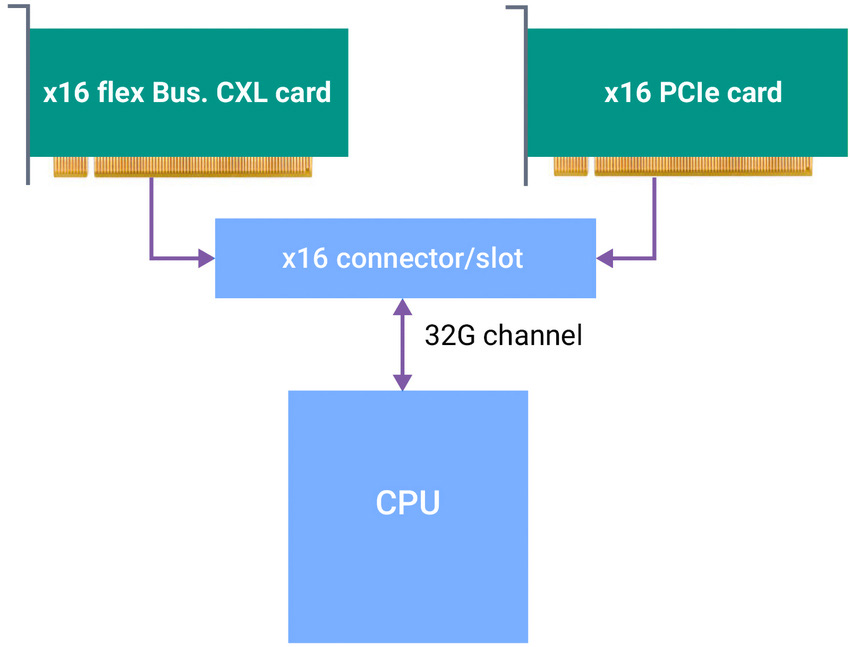

Via Flex Bus, CXL can connect to a system anywhere PCIe 5.0 could be used. Flex Bus is a flexible high-speed port that can be statically configured to support either PCIe or CXL.

The Flex Bus link supports native PCIe and CXL cards.

CXL Vs. PCIe 5

CXL 2.0 adds protocols to PCIe 5.0’s physical and electrical interfaces that create coherency, streamline the software stack, and preserve compatibility with current standards.

To be more precise, CXL uses a PCIe 5.0 feature that permits alternate protocols to use the physical PCIe layer.

When a CXL-enabled accelerator is plugged into an x16 slot, it first negotiates with the host processor’s port at the default PCI Express 1.0 transfer rate (2.5 GT/s).

Compute Express Link transaction protocols are activated only if both sides are CXL-compatible. Otherwise, the devices operate as standard PCIe devices.
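The fallback rule above reduces to a simple decision, sketched here. The function name `negotiate_link_mode` and its boolean parameters are hypothetical; real negotiation happens during link training in hardware, not in software like this.

```python
def negotiate_link_mode(host_supports_cxl: bool, device_supports_cxl: bool) -> str:
    """Pick the protocol a link runs after the initial negotiation.

    CXL transaction protocols are used only when both ends support them;
    in every other case the link behaves as ordinary PCIe.
    """
    if host_supports_cxl and device_supports_cxl:
        return "CXL"
    return "PCIe"


print(negotiate_link_mode(True, True))    # CXL
print(negotiate_link_mode(True, False))   # PCIe
print(negotiate_link_mode(False, True))   # PCIe
```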

The alignment of CXL and PCIe 5 allows both device classes to transfer data at 32 GT/s (giga transfers per second), or up to 64 GB/s in each direction over a 16-lane link, according to Chris Angelini of VentureBeat.
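The 64 GB/s figure follows directly from the transfer rate and lane count. The short calculation below shows the arithmetic; the helper name `link_bandwidth_gb_per_s` is an assumption for illustration, and the optional efficiency factor reflects the 128b/130b line encoding used by PCIe 5.0.

```python
def link_bandwidth_gb_per_s(gt_per_s: float, lanes: int,
                            encoding_efficiency: float = 128 / 130) -> float:
    """Per-direction bandwidth in GB/s for a serial link.

    Each transfer carries one bit per lane, so bits/s = GT/s * 1e9 * lanes,
    scaled by the fraction of bits that carry payload after line encoding.
    """
    bits_per_second = gt_per_s * 1e9 * lanes * encoding_efficiency
    return bits_per_second / 8 / 1e9


raw = link_bandwidth_gb_per_s(32, 16, encoding_efficiency=1.0)
effective = link_bandwidth_gb_per_s(32, 16)

print(f"raw per direction:       {raw:.1f} GB/s")        # 64.0 GB/s
print(f"after 128b/130b:         {effective:.1f} GB/s")  # 63.0 GB/s
print(f"raw, both directions:    {2 * raw:.1f} GB/s")    # 128.0 GB/s
```

The commonly quoted 64 GB/s is the raw per-direction rate; protocol overhead above the line encoding reduces usable throughput further and is not modeled here.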

Angelini also points out that the performance requirements of CXL are likely to drive the adoption of PCIe 6.0.

CXL Features and Benefits

By streamlining and improving low-latency connectivity and memory coherency, CXL significantly increases computing performance and efficiency while lowering total cost of ownership (TCO).

CXL memory expansion options provide more capacity and bandwidth beyond the limited DIMM slots in today’s servers.

CXL allows extra memory to be added to a host CPU through a CXL-attached device.

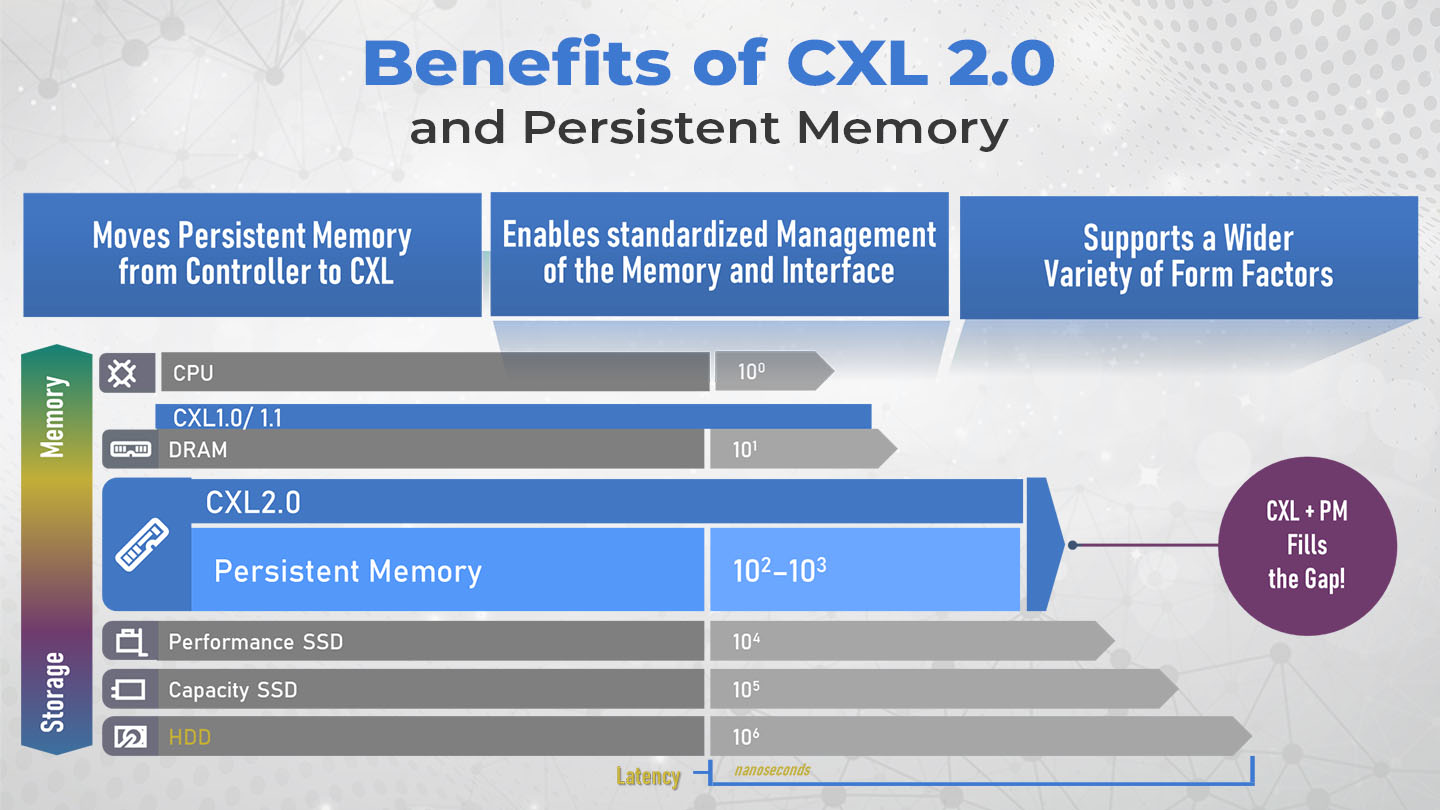

When paired with persistent memory, the low-latency CXL link lets the host CPU use this additional memory alongside its DRAM. Workloads that need large memory capacity, such as AI, depend on this for high performance.

The benefits of CXL are apparent when you consider the workloads that most companies and data center operators are investing in.

CXL 2.0

CXL has been one of the more intriguing interconnect standards in recent months.

Built on a PCIe physical foundation, CXL is a connectivity standard intended to handle considerably more than PCIe can. Beyond simply carrying data between hosts and devices, CXL supports three branches: IO, Cache, and Memory.

These three form the core of a new way of connecting a host with a device, as defined in the CXL 1.0 and 1.1 standards. The updated CXL 2.0 standard takes this further.

There are no bandwidth or latency upgrades in CXL 2.0 because it is still based on the same PCIe 5.0 physical standard, but it does include certain much-needed PCIe-specific features that users are accustomed to.

The same CXL.io, CXL.cache, and CXL.memory intrinsics, which deal with how data is handled and in what context, are at the heart of CXL 2.0; however, switching capabilities, additional encryption, and support for persistent memory have been introduced.

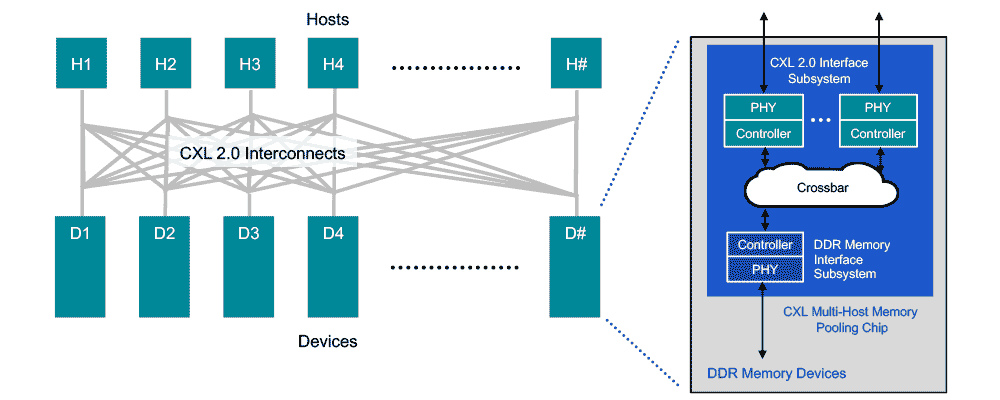

Memory Pooling:

Switching is supported by CXL 2.0 to enable memory pooling. A host can access one or more devices from the pool via a CXL 2.0 switch.

Although the hosts must support CXL 2.0 to take advantage of this feature, the memory devices can be a mix of CXL 1.0, 1.1, and 2.0-enabled hardware. A CXL 1.0/1.1 device can only function as a single logical device accessible by one host at a time.

However, a 2.0-level device can be divided into multiple logical devices, enabling up to 16 hosts to simultaneously access different parts of its memory.

For instance, host 1 (H1) can use half the memory in device 1 (D1) and a quarter of the memory in device 2 (D2) to match the memory needs of its workload precisely to the available capacity in the memory pool.

The remaining capacity in D1 and D2 can be used by one or more of the other hosts, up to a maximum of 16 hosts per device. Devices D3 and D4, which are CXL 1.0 and 1.1 compatible, respectively, can each be used by only one host at a time.
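The pooling rules in this example can be captured in a small model. This is a sketch under stated assumptions: the class `PooledDevice`, its fields, and the 256 GB capacities are hypothetical and chosen only to make the H1/D1/D2 example concrete. It is not how a CXL switch or fabric manager is actually programmed.

```python
from dataclasses import dataclass, field
from typing import Dict

MAX_HOSTS_PER_DEVICE = 16  # limit stated in the text for CXL 2.0 devices


@dataclass
class PooledDevice:
    name: str
    capacity_gb: int
    multi_logical: bool  # True for CXL 2.0 devices, False for CXL 1.0/1.1
    allocations: Dict[str, int] = field(default_factory=dict)

    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.allocations.values())

    def allocate(self, host: str, size_gb: int) -> None:
        """Assign size_gb of this device to host, enforcing the sharing rules."""
        limit = MAX_HOSTS_PER_DEVICE if self.multi_logical else 1
        if host not in self.allocations and len(self.allocations) >= limit:
            raise ValueError(f"{self.name}: host limit of {limit} reached")
        if size_gb > self.free_gb():
            raise ValueError(f"{self.name}: only {self.free_gb()} GB free")
        self.allocations[host] = self.allocations.get(host, 0) + size_gb


# Hypothetical pool: D1 and D2 are CXL 2.0 devices, D3 is a CXL 1.x device.
d1 = PooledDevice("D1", capacity_gb=256, multi_logical=True)
d2 = PooledDevice("D2", capacity_gb=256, multi_logical=True)
d3 = PooledDevice("D3", capacity_gb=256, multi_logical=False)

d1.allocate("H1", d1.capacity_gb // 2)  # H1 takes half of D1
d2.allocate("H1", d2.capacity_gb // 4)  # H1 takes a quarter of D2
d1.allocate("H2", 64)                   # another host shares the rest of D1
d3.allocate("H3", 128)                  # D3 is now bound to H3 only

print(d1.allocations, d1.free_gb())     # {'H1': 128, 'H2': 64} 64
```

A second host requesting memory from `d3` raises `ValueError`, which mirrors the one-host-at-a-time restriction on CXL 1.0/1.1 devices.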

CXL 2.0 Switching:

For readers unfamiliar with PCIe switches: a switch connects to a host processor over a fixed number of lanes, such as eight or sixteen, and then provides a larger number of lanes downstream to increase the number of supported devices.

A typical PCIe switch, for instance, might have an x16 link to the CPU but 48 PCIe lanes downstream to support six GPUs at x8 each.

Although the upstream link is a bottleneck, switching is the best option for workloads that depend on GPU-to-GPU transfers, especially on systems with limited CPU lanes. CXL 2.0 now supports switching as part of the standard.
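The size of that upstream bottleneck can be quantified as an oversubscription ratio, using the lane counts from the example above. The function name `oversubscription_ratio` is an illustrative assumption.

```python
def oversubscription_ratio(upstream_lanes: int, devices: int,
                           lanes_per_device: int) -> float:
    """Ratio of total downstream lanes to upstream lanes on a switch."""
    downstream_lanes = devices * lanes_per_device
    return downstream_lanes / upstream_lanes


# Six GPUs at x8 each behind an x16 uplink: 48 downstream lanes over 16 upstream.
ratio = oversubscription_ratio(upstream_lanes=16, devices=6, lanes_per_device=8)
print(ratio)  # 3.0
```

A ratio of 3.0 means that if all six devices talk to the host at full rate simultaneously, each gets roughly a third of its link bandwidth; traffic that stays between devices under the switch does not cross the uplink.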

CXL 2.0 Persistent Memory:

Persistent memory, which is nearly as fast as DRAM yet retains data like NAND, is a development that has emerged in enterprise computing in recent years.

It has long been unclear whether such memory would be used as slow, high-capacity memory behind a DRAM interface or as small, fast storage behind a storage-like interface.

The original CXL standards did not directly support persistent memory unless it was already attached to a device using CXL.memory. CXL 2.0 adds distinct PMEM support as part of a set of pooled resources.

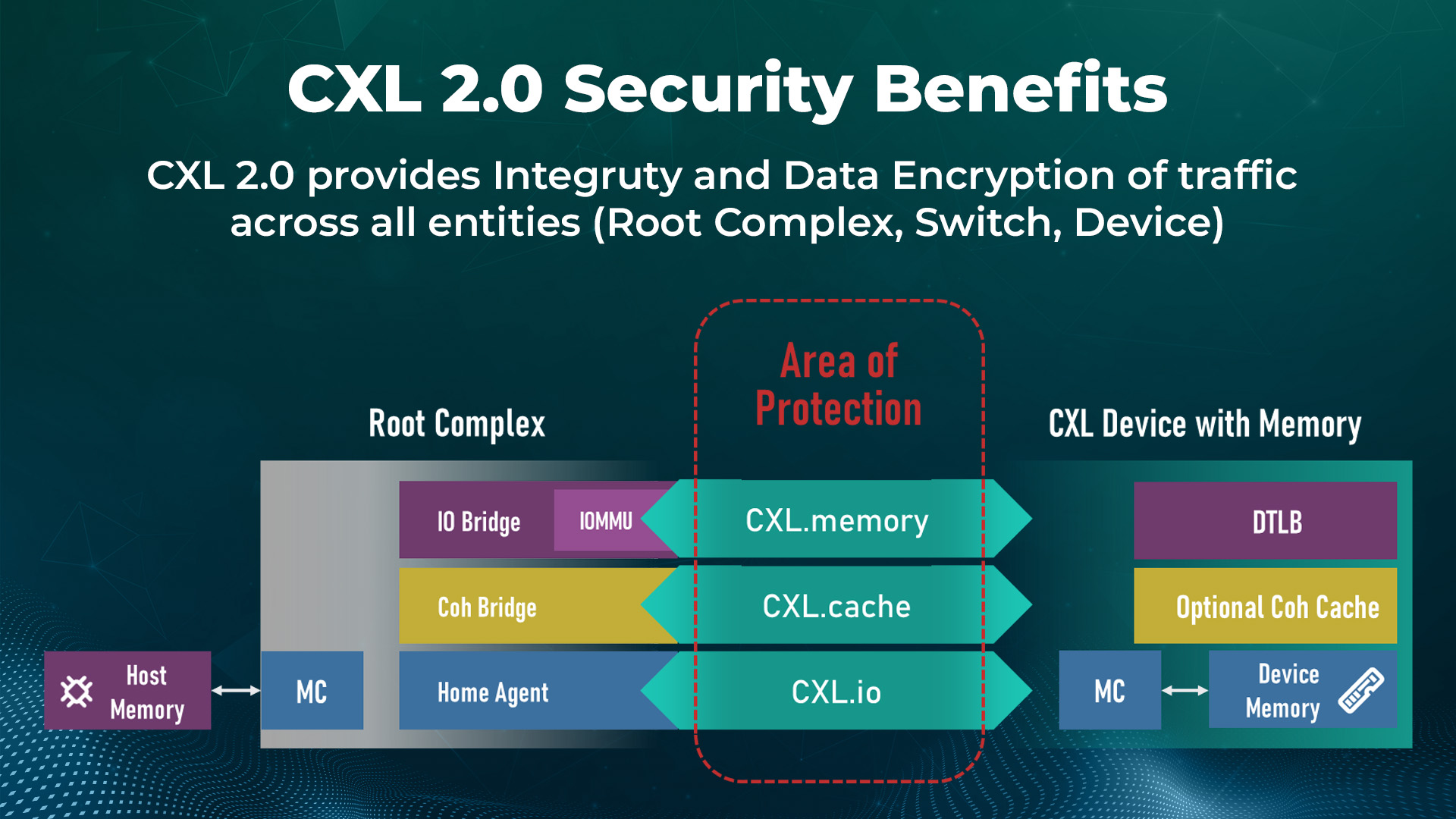

CXL 2.0 Security

Point-to-point security for any CXL link is the final and, in some eyes, most significant feature improvement.

The CXL 2.0 standard supports any-to-any communication encryption through hardware acceleration in CXL controllers.

This is an optional component of the standard, meaning that silicon providers do not have to build it in, or if they do, it can be enabled or disabled.

CXL 2.0 specification

With full backward compatibility with CXL 1.1 and 1.0, the CXL 2.0 Specification preserves industry investments while adding support for switching (fan-out to connect to more devices), memory pooling (to increase memory utilization efficiency and provide memory capacity on demand), and persistent memory.

Key Highlights of the CXL 2.0 Specification:

- Adds support for switching to enable resource migration, memory scaling, and device fanout.

- Adds support for memory pooling to maximize memory utilization and reduce or eliminate the need to overprovision memory.

- Provides confidentiality, integrity, and replay protection for data transiting the CXL link by adding link-level Integrity and Data Encryption (CXL IDE).
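To illustrate what integrity and replay protection mean for data crossing a link, the toy sketch below tags each payload with an HMAC over a monotonically increasing counter. This is explicitly not the CXL IDE algorithm: CXL IDE is built around an authenticated cipher (AES-GCM) implemented in hardware, and this example omits confidentiality entirely because the Python standard library has no cipher. The class `IntegrityProtectedLink` and its methods are hypothetical.

```python
import hashlib
import hmac
import os
from typing import Tuple

Packet = Tuple[int, bytes, bytes]  # (counter, payload, tag)


class IntegrityProtectedLink:
    """Toy sender/receiver pair sharing a key and per-direction counters."""

    def __init__(self, key: bytes) -> None:
        self.key = key
        self.tx_counter = 0
        self.rx_counter = 0

    def _tag(self, counter: int, payload: bytes) -> bytes:
        # Binding the counter into the tag is what defeats replays.
        message = counter.to_bytes(8, "big") + payload
        return hmac.new(self.key, message, hashlib.sha256).digest()

    def send(self, payload: bytes) -> Packet:
        packet = (self.tx_counter, payload, self._tag(self.tx_counter, payload))
        self.tx_counter += 1
        return packet

    def receive(self, packet: Packet) -> bytes:
        counter, payload, tag = packet
        if counter != self.rx_counter:
            raise ValueError("replayed or out-of-order packet")
        if not hmac.compare_digest(tag, self._tag(counter, payload)):
            raise ValueError("integrity check failed")
        self.rx_counter += 1
        return payload


link = IntegrityProtectedLink(os.urandom(32))
first = link.send(b"flit-0")
print(link.receive(first))  # b'flit-0'
```

Calling `link.receive(first)` a second time raises `ValueError` because the counter no longer matches, and altering the payload of a packet in flight fails the tag comparison.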

CXL Consortium

Compute Express Link™ (CXL™) is a new high-speed CPU-to-device and CPU-to-memory interconnect created to boost the performance of next-generation data centers.

The CXL Consortium was established in early 2019 and incorporated in Q3 of 2019. By maintaining memory coherency between the CPU memory space and the memory on attached devices, CXL technology enables resource sharing for improved performance, a simpler software stack, and lower system cost.

Thanks to this, users can concentrate on their target workloads rather than on redundant memory management hardware in their accelerators.

The CXL Consortium is an open industry standards group formed to develop technical specifications that promote an open ecosystem for data center accelerators and other high-speed improvements while enabling breakthrough performance for new usage models.

How LFT can help with CXL

LFT helps CXL in various ways, some of which are listed below:

- LFT has a proven track record on PCIe/CXL Physical Layer and thus, can provide support in the Physical layer.

- LFT can provide support in architecting the various solutions of CXL.

- LFT will release a CXL IP during the first quarter of 2023.

Conclusion

Large systems with memory coherency are likely to use several protocols to cover CPU-to-CPU, CPU-to-attached-device, and longer-distance chassis-to-chassis requirements.

At the moment, CXL is concentrating on offering a server-optimized solution.

Due to its inherent asymmetry, it might not be the best fit for CPU-to-CPU or accelerator-to-accelerator connections.

Because it relies on PCIe 5.0 PHYs, an alternate transport might be more appropriate for improving performance in rack-to-rack setups.

Since CXL and PCIe 5.0 are closely related, we anticipate that CXL-compatible products will be released at the same time as PCIe 5.0 products.

According to an editorial published on March 11, 2019, Intel “plans to release devices that utilize CXL technology starting in Intel’s 2021 data center platforms, including Intel® Xeon® processors, FPGAs, GPUs, and SmartNICs.”2

To aid adoption of the standard, the CXL Consortium has acknowledged the need to establish some form of interoperability and compliance program.

As a result, a few minor changes will be made to the specification to meet this requirement, and eventually, it seems likely that a compliance program will be implemented.

The Compute Express Link standard, which appears to be gaining ground quickly, has advantages for devices that must effectively process data while coherently sharing memory with a host CPU.

The Compute Express Link consortium has 75 members as of this writing and is continually expanding. With Intel, a large CPU manufacturer, supporting the standard and introducing Compute Express Link-capable devices in 2021, it appears likely that there will be widespread industry acceptance.