Artificial intelligence (AI) is the term used to describe non-human, machine intelligence that can make judgments similarly to how people do.

This includes faculties such as contemplation, adaptation, intention, and judgment. Applications in the AI market include robotic automation, computer vision, cognitive computing, and machine learning.

As global demand for machine learning devices rises, many major players in the EDA (Electronic Design Automation), graphics card, gaming, social media, and multimedia sectors are investing in fast, cutting-edge computing processors.

While software algorithms that simulate human cognition and reasoning make up most of artificial intelligence, hardware is also a key element.

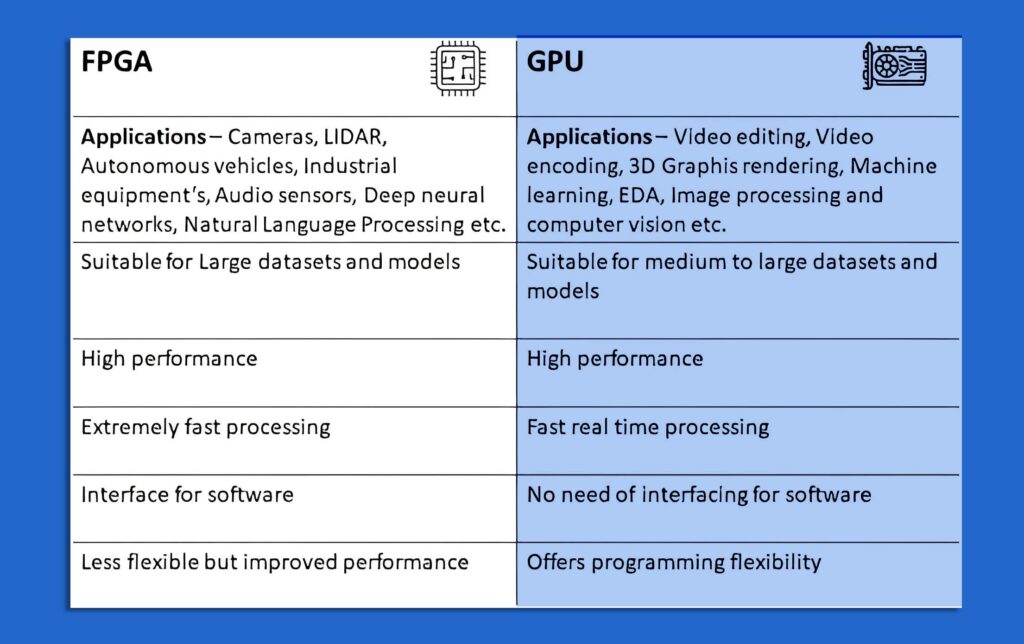

To power AI-based applications, Field-Programmable Gate Arrays (FPGAs) and Graphics Processing Units (GPUs) are essential. While GPUs are specialized processors made for high-performance computing, FPGAs are programmable integrated circuits that offer flexibility and parallel processing capabilities.

Due to their capacity to handle complex algorithms, expedite data processing, and carry out parallel operations, FPGAs and GPUs have emerged as crucial elements in AI applications.

This blog discusses how to choose between FPGAs and GPUs for AI-based systems, covering their capabilities, the factors to consider, and typical use cases.

FPGA and GPU

FPGA (Field-Programmable Gate Array):

A reconfigurable hardware device that allows users to program and configure its internal logic gates and interconnections to implement custom digital circuits.

GPU (Graphics Processing Unit):

A specialized electronic circuit designed to accelerate image and video processing for display output in computers and gaming consoles, with a large number of cores optimized for parallel computation.

FPGAs are generally more energy-efficient than CPUs and GPUs and better suited to embedded applications. Because their logic is not fixed at design time as a GPU's is, they can also work with custom data types.
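As a rough illustration of what a custom data type can look like, the sketch below quantizes a value to signed fixed-point formats of arbitrary width, the kind of narrow format an FPGA design could implement directly in logic. The function names, the example weight, and the bit widths are assumptions chosen for illustration, not taken from any particular FPGA toolchain.

```python
def to_fixed_point(value: float, total_bits: int, frac_bits: int) -> int:
    """Quantize a float to a signed fixed-point integer, saturating on overflow."""
    scale = 1 << frac_bits                    # 2 ** frac_bits
    lowest = -(1 << (total_bits - 1))         # most negative representable code
    highest = (1 << (total_bits - 1)) - 1     # most positive representable code
    raw = round(value * scale)                # nearest code (ties go to even)
    return max(lowest, min(highest, raw))


def from_fixed_point(raw: int, frac_bits: int) -> float:
    """Convert a fixed-point integer code back to a float."""
    return raw / (1 << frac_bits)


# Hypothetical weight and formats: (total bits, fractional bits).
weight = 0.7431
for total_bits, frac_bits in [(16, 12), (8, 6), (6, 4)]:
    raw = to_fixed_point(weight, total_bits, frac_bits)
    approx = from_fixed_point(raw, frac_bits)
    print(f"{total_bits}-bit: code={raw}, value={approx}, "
          f"error={abs(weight - approx):.5f}")
```

Narrower formats need less logic and memory per value but lose precision, which is the trade-off a designer would weigh when picking a width.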

Furthermore, due to their programmability, FPGAs are easier to alter to solve security and safety concerns.

Benefits of FPGAs in AI-based Applications

FPGAs offer several advantages for AI-based applications:

1. Parallel Processing Capabilities:

FPGAs are excellent at parallel processing, making it possible to carry out several operations at once. For AI algorithms, which frequently require intensive matrix operations and neural network computations, this parallelism is quite advantageous.

2. Low Latency and High Throughput:

Due to their ability to directly implement hardware circuits, FPGAs can provide low-latency and high-throughput performance. As a result, they are appropriate for real-time AI applications like autonomous driving or video analytics where prompt responses are essential.

3. Flexibility and Re-programmability:

FPGAs can be reprogrammed repeatedly, letting designers modify their hardware designs as specifications change. This adaptability is advantageous in the quickly changing field of artificial intelligence, where algorithms and models often go through upgrades and improvements.
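The low-latency and high-throughput point above can be made concrete with a simple model of a hardware pipeline. This is only a sketch: it assumes an idealized pipeline with no stalls, and the stage count and clock frequency are hypothetical values, not figures for any real device.

```python
def pipeline_latency_s(stages: int, clock_hz: float) -> float:
    """Time for one input to pass through every stage of the pipeline."""
    return stages / clock_hz


def pipeline_throughput_per_s(clock_hz: float, initiation_interval: int = 1) -> float:
    """Results per second once the pipeline is full.

    initiation_interval is the number of clock cycles between accepting
    consecutive inputs (1 means a new input every cycle).
    """
    return clock_hz / initiation_interval


def total_time_s(items: int, stages: int, clock_hz: float,
                 initiation_interval: int = 1) -> float:
    """Time to process `items` inputs: fill the pipeline once, then stream."""
    cycles = stages + (items - 1) * initiation_interval
    return cycles / clock_hz


# Hypothetical design: 50 pipeline stages clocked at 200 MHz.
STAGES, CLOCK_HZ = 50, 200e6
print(f"latency per input: {pipeline_latency_s(STAGES, CLOCK_HZ) * 1e6:.2f} us")
print(f"throughput: {pipeline_throughput_per_s(CLOCK_HZ) / 1e6:.0f} M results/s")
print(f"time for 1000 inputs: {total_time_s(1000, STAGES, CLOCK_HZ) * 1e6:.2f} us")
```

In this model each input sees the same short, fixed latency no matter how many inputs are in flight, which is why pipelined hardware suits real-time workloads.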

Market Growth of FPGA in 2023

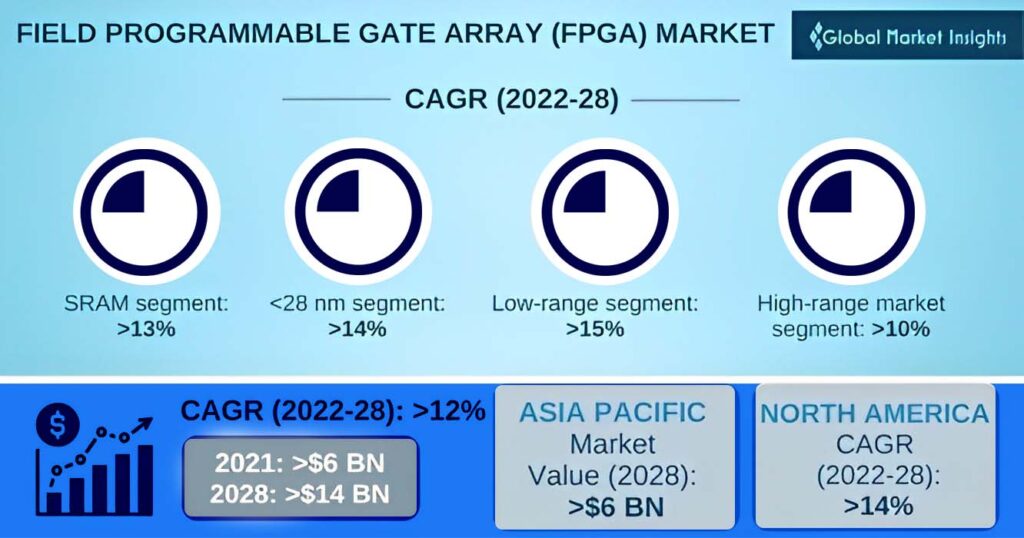

Global Market Insights estimates that the FPGA market exceeded USD 6 billion in 2021 and will grow at a CAGR of more than 12% from 2022 to 2028. The industry is expanding because data centers are increasingly using artificial intelligence (AI) and machine learning (ML) technology for edge computing applications.
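To see what a compound annual growth rate (CAGR) of this size implies, the sketch below compounds the quoted figures year by year. It assumes the 12% rate applies to the 2021 base of USD 6 billion for each year through 2028. Since both quoted figures are lower bounds, the result is an illustrative floor of about USD 13 billion, not a forecast.

```python
def project_market_size(base_value: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return base_value * (1 + cagr) ** years


BASE_YEAR = 2021
BASE_USD_BN = 6.0   # quoted lower bound for 2021
CAGR = 0.12         # quoted lower bound on annual growth

for year in range(BASE_YEAR + 1, 2029):
    size = project_market_size(BASE_USD_BN, CAGR, year - BASE_YEAR)
    print(f"{year}: at least USD {size:.1f} billion")
```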

Overview of Graphics Processing Unit (GPU)

Graphics processing units (GPUs) were originally designed to render geometric objects in computer graphics and virtual reality environments, work that relies on intensive floating-point computation. The same capability underpins modern deep learning, which would be impractical at its current scale without this kind of hardware.

To successfully study and learn, artificial intelligence (AI) needs a lot of data. It takes a large amount of computational power to run AI algorithms and transfer a lot of data.

GPUs can carry out these jobs because they were designed as fast data processors for producing graphics and video. Their raw computing power is one reason for their broad use in machine learning and AI applications.

GPUs can perform many calculations at once, so distributing training work across their cores can substantially speed up machine learning tasks. Many lightweight kernels can also run on a GPU concurrently, generally with little impact on performance or power usage.

There are many kinds of GPUs available; they are often divided into three categories: enterprise-grade, consumer-grade, and GPUs for data centers.

Benefits of GPUs in AI-based Applications

GPUs offer several advantages for AI-based applications:

1. Memory Bandwidth

GPUs can process deep learning calculations quickly because of their high memory bandwidth. Because they carry their own dedicated memory, training on huge datasets places less demand on system memory. With memory bandwidth of up to 750 GB/s, they can significantly accelerate AI algorithms.

2. Multi-Core:

GPUs are typically made up of numerous combinable processing clusters. This significantly boosts the system’s processing capabilities, especially for AI applications that require concurrent data input, convolutional neural networks (CNN), and training for ML algorithms.

3. Flexibility:

Because GPUs operate in parallel, you can organize them into clusters and distribute jobs among them. Another option is to use individual GPUs, with clusters dedicated to training specific algorithms. With their high data throughput, GPUs can apply a single operation to multiple data points in parallel, allowing them to process massive volumes of data at very high speed.

4. Dataset Size

GPUs are among the best options for data sets larger than 100 GB, which the memory-intensive computations of AI model training often require. Since parallel processing first emerged, GPUs have supplied the raw computing power needed to process both largely uniform and unstructured data efficiently.
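The memory bandwidth and dataset size figures above can be combined into a back-of-the-envelope estimate of how long it takes just to read the data once. The sketch assumes the quoted bandwidth figure means 750 GB per second and ignores compute time and transfers from the host. The slower comparison bandwidth is a hypothetical value included only for contrast.

```python
def streaming_time_s(data_gb: float, bandwidth_gb_per_s: float) -> float:
    """Lower bound on the time to read `data_gb` once at a given bandwidth."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return data_gb / bandwidth_gb_per_s


DATASET_GB = 100.0            # dataset size mentioned in the text
GPU_BANDWIDTH_GB_S = 750.0    # quoted figure, read here as GB per second
SLOWER_BANDWIDTH_GB_S = 50.0  # hypothetical slower memory system

for label, bandwidth in [("750 GB/s", GPU_BANDWIDTH_GB_S),
                         ("50 GB/s", SLOWER_BANDWIDTH_GB_S)]:
    seconds = streaming_time_s(DATASET_GB, bandwidth)
    print(f"one pass over {DATASET_GB:.0f} GB at {label}: {seconds:.2f} s")
```

Training typically makes many passes over the data, so a difference in bandwidth is multiplied by the number of passes.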

Market Growth of GPU in 2023

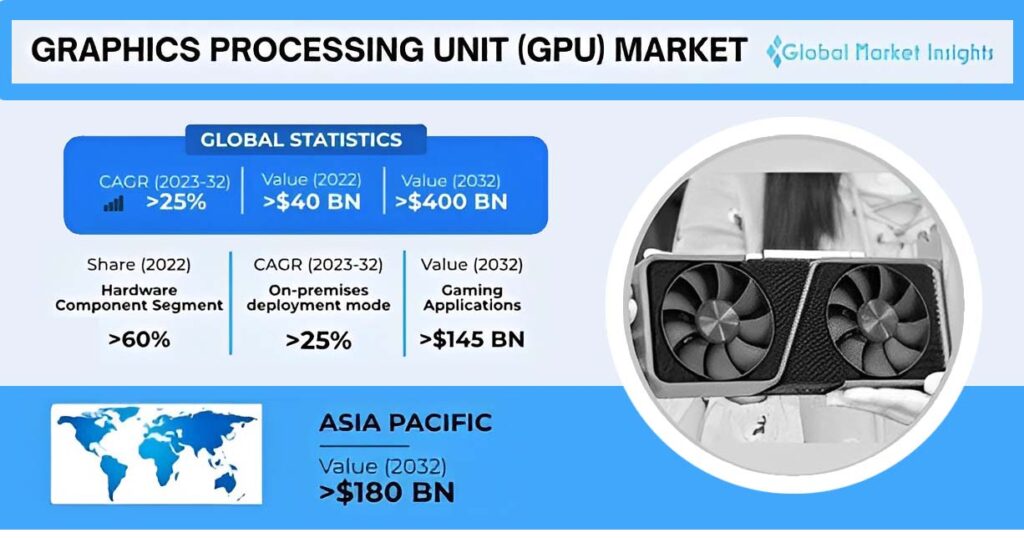

Global Market Insights estimates that the GPU market exceeded USD 40 billion in 2022 and will grow at a rate of over 25% between 2023 and 2032. The rapid adoption of big data technology is expected to support the industry’s growth.

Comparison of FPGAs and GPUs for AI-Based Applications

A. Performance comparison

1. FPGAs:

- FPGAs offer highly parallel and configurable architectures that can be customized for specific AI workloads.

- They excel at performing concurrent computations and can achieve low-latency processing.

- FPGAs are well-suited for applications requiring real-time inference or high-speed data processing.

2. GPUs:

- GPUs are designed with many cores optimized for parallel processing.

- They excel at handling massive amounts of data in parallel, making them ideal for training deep learning models.

- GPUs are highly efficient in performing matrix operations, commonly used in AI algorithms.
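The matrix operations mentioned above parallelize well because every output element can be computed independently of the others. The plain-Python sketch below is not how a GPU or FPGA is actually programmed. It only makes that independence visible and counts the multiply-accumulate (MAC) operations involved. The function names are illustrative.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply two matrices; each output cell depends only on one row of `a`
    and one column of `b`, so all cells could be computed at the same time."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def mac_count(rows: int, inner: int, cols: int) -> int:
    """Number of multiply-accumulate operations in a rows x inner by inner x cols product."""
    return rows * inner * cols


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
print(mac_count(1024, 1024, 1024))                 # 1073741824
```

A single 1024 x 1024 product already involves over a billion MACs, which is why hardware that runs many of them concurrently matters for AI workloads.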

B. Power Consumption Comparison

1. FPGAs:

- FPGAs are known for their power efficiency due to their ability to implement custom hardware configurations.

- They can achieve high performance while consuming relatively low power, making them suitable for energy-constrained applications.

2. GPUs:

- GPUs are powerful processors that typically consume higher power compared to FPGAs.

- They are designed to deliver high-performance computing and may require additional cooling mechanisms.
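Power draw on its own does not say which device is more efficient for a given job. A common way to compare is energy per inference, which divides power by throughput. The sketch below uses made-up placeholder numbers for two hypothetical devices. They are not measurements of any real FPGA or GPU.

```python
def energy_per_inference_j(power_w: float, throughput_per_s: float) -> float:
    """Energy in joules spent on each inference at steady state."""
    if throughput_per_s <= 0:
        raise ValueError("throughput must be positive")
    return power_w / throughput_per_s


# Placeholder figures for illustration only: (power in watts, inferences per second).
devices = {
    "hypothetical low-power device": (25.0, 500.0),
    "hypothetical high-power device": (250.0, 4000.0),
}

for name, (power_w, throughput) in devices.items():
    energy = energy_per_inference_j(power_w, throughput)
    print(f"{name}: {energy * 1000:.1f} mJ per inference")
```

With these placeholder values the lower-power device also uses less energy per inference, but a different throughput ratio could reverse the result, so both numbers need to be measured for the actual workload.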

C. Development and Programming Considerations

1. FPGAs:

- Development for FPGAs typically requires a hardware description language (HDL) such as VHDL or Verilog.

- It demands a deeper understanding of the hardware design process and requires expertise in FPGA programming.

- However, FPGA development tools and frameworks, such as Intel’s OpenVINO or Xilinx’s Vitis, are evolving to provide higher-level programming abstractions.

2. GPUs:

- GPUs have established software ecosystems with robust libraries and frameworks, such as TensorFlow and PyTorch, making AI development more accessible.

- AI frameworks provide high-level APIs that abstract away many complexities, allowing developers to focus on algorithm design and implementation.

D. Cost Considerations

1. FPGAs:

- FPGAs generally have a higher upfront cost due to their specialized hardware.

- Customization and optimization of FPGA designs may require additional development and engineering resources.

- However, for specific use cases that benefit from FPGA’s unique capabilities, the performance gains may justify the investment.

2. GPUs:

- GPUs have a lower upfront cost compared to FPGAs and are more readily available in the market.

- They offer a cost-effective solution for a wide range of AI applications, especially deep learning tasks.
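One way to reason about whether a higher upfront cost can pay off is a break-even estimate that weighs the purchase price against electricity costs over time. The sketch below is a simplification: it ignores engineering effort, cooling, and performance differences, and every number in it is a hypothetical placeholder.

```python
from typing import Optional


def break_even_hours(upfront_a: float, power_a_w: float,
                     upfront_b: float, power_b_w: float,
                     price_per_kwh: float) -> Optional[float]:
    """Hours of operation after which option A's lower power draw has repaid
    its higher purchase price. Returns None if A never catches up."""
    extra_upfront = upfront_a - upfront_b
    saving_per_hour = (power_b_w - power_a_w) / 1000.0 * price_per_kwh
    if extra_upfront <= 0:
        return 0.0   # A is not more expensive to buy in the first place
    if saving_per_hour <= 0:
        return None  # A costs more upfront and saves nothing on power
    return extra_upfront / saving_per_hour


# Placeholder values: option A costs more but draws less power than option B.
hours = break_even_hours(upfront_a=8000.0, power_a_w=50.0,
                         upfront_b=5000.0, power_b_w=300.0,
                         price_per_kwh=0.15)
print(f"break-even after about {hours:,.0f} hours of operation")
```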

E. Use cases suitable for FPGAs:

- Real-time video processing and analytics

- High-frequency trading and financial data analysis

- Edge computing applications with strict latency requirements

- Hardware-accelerated AI inference in resource-constrained environments

F. Use cases suitable for GPUs:

- Deep learning model training

- Image and video recognition

- Natural language processing and text analytics

- Large-scale data processing and analytics
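The use cases listed above can be condensed into a rough rule of thumb. The helper below is a deliberately simplified sketch of that guidance, not a substitute for benchmarking. The function name and parameters are illustrative, and the default choice for unconstrained inference is an assumption based on the more accessible GPU software ecosystem described earlier.

```python
def suggest_accelerator(workload: str, strict_latency: bool = False,
                        power_constrained: bool = False) -> str:
    """Return "GPU" or "FPGA" as a first guess for a given AI workload."""
    if workload not in ("training", "inference"):
        raise ValueError("workload must be 'training' or 'inference'")
    if workload == "training":
        # Deep learning model training is listed as a GPU use case.
        return "GPU"
    if strict_latency or power_constrained:
        # Real-time or resource-constrained inference is listed under FPGAs.
        return "FPGA"
    # Assumption: without such constraints, default to the GPU ecosystem.
    return "GPU"


print(suggest_accelerator("training"))                         # GPU
print(suggest_accelerator("inference", strict_latency=True))   # FPGA
print(suggest_accelerator("inference"))                        # GPU
```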

Conclusion:

Both FPGAs and GPUs are essential for accelerating calculations and producing high-performance outcomes in the world of AI-based applications.

FPGAs are ideal for real-time inference and data processing jobs due to their parallel processing, low latency, and configurable features.

On the other hand, GPUs excel at huge parallelism and are particularly useful for managing computationally demanding workloads and deep learning model training.

By combining the two technologies, developers can use FPGAs for latency-sensitive preprocessing and acceleration while GPUs handle parallel, compute-intensive workloads. This strategy has the potential to improve AI applications’ performance and effectiveness.

In conclusion, performance, power consumption, development needs, and cost must all be carefully considered when choosing FPGAs and GPUs for AI-based applications.

To fully utilize hardware acceleration in AI, developers and researchers must have a thorough understanding of the advantages and disadvantages of each technology.
