Real-Time AI: Artificial intelligence is accelerating change across industries by enabling smarter, more autonomous systems.

As embedded AI becomes integrated into more and more devices, we are entering a new age of innovation.

Embedded AI reimagines fixed-function hardware as a smart platform that can process data locally, make decisions in real time, and learn as environments change.

It is driving rapid progress in areas such as the IoT, robotics, and automation, delivering gains in speed and efficiency while reducing costs.

According to Grand View Research, the global artificial intelligence market was estimated at USD 279.22 billion in 2024 and is expected to grow at a compound annual growth rate (CAGR) of 35.9% from 2025 to 2030, reaching USD 1,811.75 billion.

Embedded vision, which combines artificial intelligence (AI) and small camera systems, is central to this transformation.

Like traditional vision systems, embedded vision systems analyze visual information and derive meaning from it.

The key difference is that embedded vision devices perform this analysis on-device, with very low latency.

Because they interpret their surroundings immediately, they enable machines to react in real time.

This capability is especially useful in applications such as autonomous vehicles, security surveillance, industrial automation, and healthcare, where visual data must be analyzed reliably in real time.

According to Precedence Research, the global embedded systems market is estimated at USD 178.15 billion in 2024 and is projected to grow from USD 186.65 billion in 2025 to USD 283.90 billion in 2034, a compound annual growth rate (CAGR) of 4.77% as the market matures.

This blog will examine how Logic Fruit’s AI/ML-driven solutions solve some of the most difficult problems of our day by providing sophisticated real-time vision capabilities tailored for embedded platforms.

What is Real-Time Embedded AI?

Integrating AI into embedded systems combines the speed and efficiency of embedded devices with the learning and decision-making power of AI.

It gives embedded systems the ability to make decisions in real time, adjust to changing circumstances, and improve over time.

In this context, AI in embedded systems and applications refers to the incorporation of deep learning into the device's hardware and software.

The purpose of embedded systems is to carry out specific tasks within larger devices or systems. When AI is incorporated, such systems can evaluate data, make judgments, and carry out activities more accurately, autonomously, and efficiently.

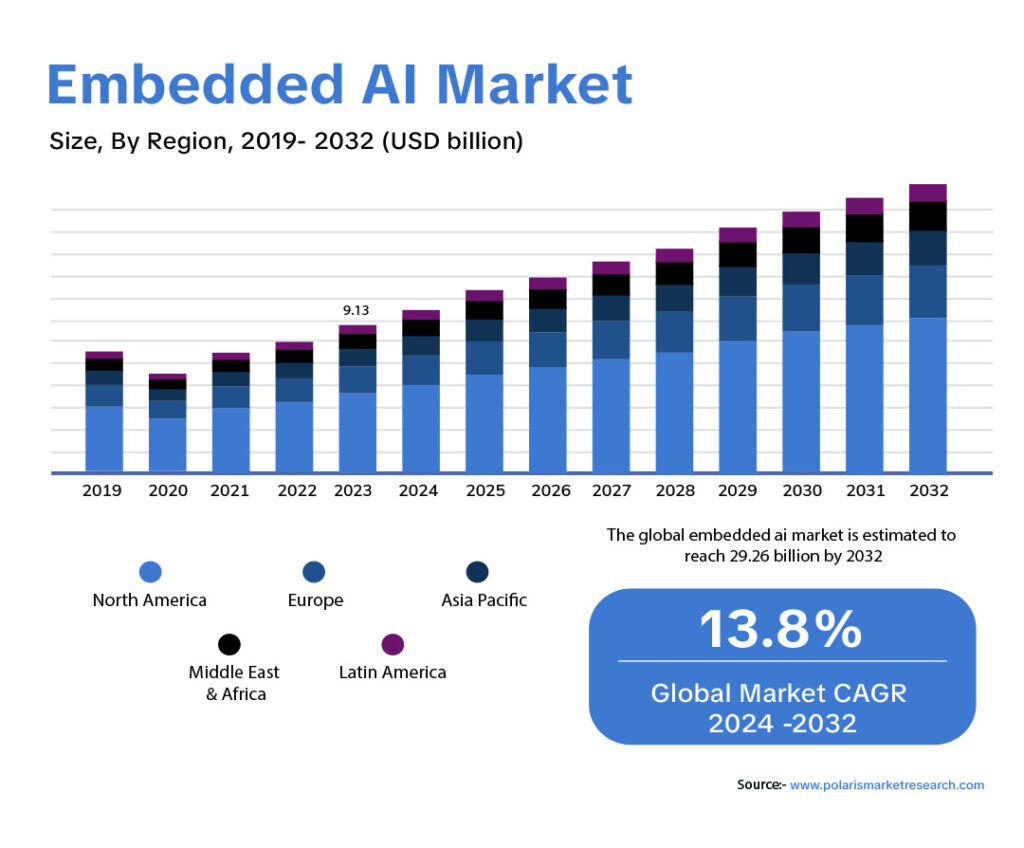

According to Polaris Market Research, the embedded AI market was valued at USD 9.13 billion in 2023 and USD 10.37 billion in 2024, and is expected to grow at a compound annual growth rate (CAGR) of 13.8% from 2024 to 2032.

The term “embedded artificial intelligence” (AI) describes the direct integration of AI capabilities into networked devices and systems, allowing them to carry out intricate activities without constant internet connectivity.

This technology is becoming increasingly important in domains such as robotics, industrial applications, and the Internet of Things (IoT).

Real-World Applications – Logic Fruit’s AI/ML Demos

Logic Fruit Technologies provides AI/ML and vision solutions and development services that enable intelligence at the source for real-time decision-making.

We specialize in solutions built on heterogeneous hardware that leverages the power of FPGAs and GPUs.

Our expertise lies in using edge AI hardware platforms to accelerate AI/ML and vision models, algorithms, and applications.

The demos in the sections below show how AI/ML-driven computer vision can solve practical problems in real time, from traffic detection to collision avoidance.

Aerial Object Detection and Tracking

This demo highlights how AI can boost real-time airspace monitoring. Using live camera feeds, the system detects and classifies aerial objects like drones, commercial planes, and military aircraft.

It’s built with Python, OpenCV, and YOLO models, running on the powerful NVIDIA ORIN AGX platform. The system is designed to work in real-time and can handle multiple flying objects at once.

Key Highlights:

- Real-Time Detection: Instantly spots aircraft within the camera feed.

- Classification: Tells apart different types of aircraft.

- Robust Performance: Works well across varied lighting and weather conditions.

- Scalable: Handles multiple aerial objects at the same time.

The result? A reliable, fast, and accurate detection system that can support various security and surveillance needs.
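To illustrate the tracking half of this demo, here is a minimal sketch of how detections from consecutive frames could be linked into tracks. It assumes the detector (for example, a YOLO model) already returns bounding boxes per frame; the `IouTracker` class, its `min_iou` parameter, and the greedy matching strategy are illustrative assumptions, not the actual implementation used in the demo.

```python
from itertools import count
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    """Hypothetical greedy tracker: a detection keeps the ID of the
    previous-frame box it overlaps most, otherwise it gets a new ID."""

    def __init__(self, min_iou: float = 0.3) -> None:
        self.min_iou = min_iou
        self.tracks: Dict[int, Box] = {}
        self._ids = count(1)

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        unmatched = dict(self.tracks)  # previous boxes not yet claimed
        updated: Dict[int, Box] = {}
        for det in detections:
            best_id, best_score = None, self.min_iou
            for track_id, box in unmatched.items():
                score = iou(det, box)
                if score >= best_score:
                    best_id, best_score = track_id, score
            if best_id is None:
                best_id = next(self._ids)  # new object entered the scene
            else:
                del unmatched[best_id]  # each track is matched at most once
            updated[best_id] = det
        # Simplification: tracks with no match are dropped immediately.
        self.tracks = updated
        return updated
```

Calling `update()` once per frame returns a mapping from track ID to box, so each aircraft or drone keeps a stable ID while it stays in view. A production tracker would typically also tolerate a few missed frames before dropping a track.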

Lane Curvature Detection & Departure

Ensuring lane discipline is a key part of road safety, especially in modern driver-assist systems.

This solution uses real-time video analysis to detect road lanes, measure their curvature, and monitor the vehicle’s position to prevent unintentional lane departures.

Developed using Python, OpenCV, edge detection, and perspective transformation, the system runs efficiently on the NVIDIA ORIN AGX platform for on-vehicle deployment.

Key Highlights:

- Lane Detection: Accurately identifies lane markings across different road types.

- Curvature Calculation: Understands road geometry to detect sharp bends.

- Position Awareness: Tracks the vehicle’s placement within the lane.

- Lane Keeping Support: Assists with maintaining proper lane alignment.

- Departure Alerts: Instantly notifies if the vehicle drifts unintentionally.

This implementation enhances driver awareness, supports active safety systems, and contributes to reducing lane-drift-related accidents.
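The curvature and departure checks can be sketched with a little geometry. This example assumes each lane line has already been fitted, in the bird's-eye view, as a quadratic x = a*y^2 + b*y + c; the function names, the 3.7 m lane width, and the 0.5 m departure limit are illustrative assumptions rather than values from the demo.

```python
def curvature_radius(a: float, b: float, y: float) -> float:
    """Radius of curvature of x = a*y**2 + b*y + c, evaluated at row y.

    The result is in the same units as x and y (pixels, unless the fit
    was done in metres). A straight line has an infinite radius.
    """
    if a == 0:
        return float("inf")
    return (1.0 + (2.0 * a * y + b) ** 2) ** 1.5 / abs(2.0 * a)


def lateral_offset_m(left_x: float, right_x: float, image_width: int,
                     lane_width_m: float = 3.7) -> float:
    """Signed distance of the camera from the lane centre, in metres.

    Assumes the camera is mounted on the vehicle centreline and that the
    lane is lane_width_m wide (an assumed, region-dependent value).
    Positive means the vehicle sits to the right of the lane centre.
    """
    lane_center_px = (left_x + right_x) / 2.0
    metres_per_px = lane_width_m / (right_x - left_x)
    return (image_width / 2.0 - lane_center_px) * metres_per_px


def is_departing(offset_m: float, limit_m: float = 0.5) -> bool:
    """Flag a departure when the offset exceeds an assumed limit."""
    return abs(offset_m) > limit_m
```

A small radius indicates a sharp bend, and `is_departing()` is where an alert would be raised. The lane positions `left_x` and `right_x` are taken at the bottom of the image, closest to the vehicle.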

License Plate Detection with Real-Time OCR

This system detects vehicle license plates and reads their characters instantly from live video feeds. Designed for real-time performance, it delivers accurate results with minimal delay.

Built using Python, OpenCV, YOLO models, and OCR techniques, it runs on the NVIDIA ORIN AGX platform to support fast and efficient processing.

Key Highlights:

- High Detection Accuracy: Precisely locates license plates on vehicles in real-time.

- Reliable Recognition: Accurately reads and interprets plate characters.

- Real-Time Performance: Processes video frames with low latency.

- Multi-Plate Detection: Handles multiple license plates within a single frame.

This solution ensures efficient and accurate license plate recognition, contributing to effective vehicle monitoring and traffic management.
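OCR output on individual video frames can flicker between similar-looking characters. One common way to stabilize it, shown here as a sketch and not necessarily what the demo does, is to normalize each reading and take a majority vote over the last few frames. The `PlateVoter` class and its `window` parameter are hypothetical names introduced for illustration.

```python
import re
from collections import Counter, deque
from typing import Optional


def normalize_plate(text: str) -> str:
    """Upper-case the OCR text and drop everything except A-Z and 0-9."""
    return re.sub(r"[^A-Z0-9]", "", text.upper())


class PlateVoter:
    """Keeps the last `window` readings for one plate and returns the
    most frequent one, which smooths out single-frame OCR errors."""

    def __init__(self, window: int = 5) -> None:
        self.readings = deque(maxlen=window)

    def add(self, raw_text: str) -> Optional[str]:
        cleaned = normalize_plate(raw_text)
        if cleaned:
            self.readings.append(cleaned)
        if not self.readings:
            return None
        return Counter(self.readings).most_common(1)[0][0]
```

In a multi-plate scene, one voter per tracked plate would be kept, so readings from different vehicles are never mixed.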

Traffic Signal and Sign Detection

This system detects and interprets traffic signals and signs in real-time to enhance road safety and optimize traffic flow.

Developed using Python3, OpenCV, YOLO models, and computer vision techniques, it runs on the NVIDIA ORIN AGX platform to ensure fast and reliable processing.

Key Highlights:

- Accurate Detection: Detects traffic signals and signs in various environmental conditions, including different lighting, weather, and traffic densities.

- Real-Time Processing: Ensures timely detection and interpretation for rapid response by the ADAS system.

- Localization and Classification: Identifies the exact location, type, and relevance of detected traffic signals and signs.

- Robustness: Handles occlusions, obstructions, and variations in placement and orientation.

The result is enhanced driver safety through accurate and real-time detection, promoting compliance with traffic regulations and smoother navigation.
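Once a traffic light has been localized, its state still has to be interpreted. The sketch below shows one simple heuristic: classify the average colour of the lit region by hue. It assumes the mean RGB value of the cropped lamp is already available; the hue ranges and the saturation and brightness cut-offs are illustrative guesses that would need tuning on real footage, and the demo itself may rely on the detector's class labels instead.

```python
import colorsys
from typing import Tuple


def classify_light(mean_rgb: Tuple[int, int, int],
                   min_saturation: float = 0.4,
                   min_value: float = 0.4) -> str:
    """Map the mean RGB colour (0-255 per channel) of a lamp crop to
    'red', 'yellow', 'green', or 'unknown'. Thresholds are assumptions."""
    r, g, b = (channel / 255.0 for channel in mean_rgb)
    hue, saturation, value = colorsys.rgb_to_hsv(r, g, b)
    hue_deg = hue * 360.0

    # Dark or washed-out crops carry no reliable colour information.
    if saturation < min_saturation or value < min_value:
        return "unknown"
    if hue_deg < 20 or hue_deg >= 340:
        return "red"
    if 35 <= hue_deg < 75:
        return "yellow"
    if 90 <= hue_deg < 170:
        return "green"
    return "unknown"
```

Returning "unknown" for ambiguous crops lets the rest of the pipeline fall back to the previous state rather than act on a doubtful reading.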

Collision Avoidance with Real-Time Ranging

This system detects vehicles and predicts potential collisions using real-time data, ensuring reliable collision avoidance in diverse driving conditions.

It also calculates the distance between approaching and departing vehicles.

Developed using Python3, OpenCV, YOLO models, object detection, and computer vision, it runs on the NVIDIA ORIN AGX platform.

Key Highlights:

- Enhanced Safety: Reduces the risk of collisions by detecting vehicles and providing timely warnings or automated intervention.

- Real-time Vehicle Detection: Detects vehicles accurately in real-time from video using object detection or computer vision.

- Distance Calculation: Calculates the distance between moving vehicles accurately.

- Threshold-based Alert System: Triggers warnings when the distance falls below a predefined safety threshold.

The result is real-time distance calculation and alert generation to prevent collisions, enhancing road safety and reducing accident rates.
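The ranging and alert logic can be sketched as follows. The post does not say how distance is measured, so this example assumes a single calibrated camera and the pinhole relation distance = focal length x real width / width in pixels. The focal length, the assumed vehicle width, and the function names are all illustrative.

```python
def estimate_distance_m(focal_px: float, real_width_m: float,
                        box_width_px: float) -> float:
    """Pinhole-camera range estimate from the width of a bounding box.

    focal_px comes from camera calibration; real_width_m is an assumed
    physical width for the detected vehicle class.
    """
    if box_width_px <= 0:
        raise ValueError("box_width_px must be positive")
    return focal_px * real_width_m / box_width_px


def closing_speed_mps(prev_distance_m: float, curr_distance_m: float,
                      dt_s: float) -> float:
    """Positive when the gap is shrinking (vehicle approaching),
    negative when it is growing (vehicle departing)."""
    return (prev_distance_m - curr_distance_m) / dt_s


def collision_alert(distance_m: float, threshold_m: float) -> bool:
    """Threshold-based alert: warn when the gap drops below the limit."""
    return distance_m < threshold_m
```

For example, with an assumed focal length of 1000 px and a vehicle width of 1.8 m, a box 90 px wide corresponds to roughly 20 m, which would trigger an alert against a 25 m threshold.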

Vehicle & Pedestrian Detection

This solution identifies and classifies vehicles and pedestrians in real-time from traffic camera footage.

It supports traffic management, enhances safety, and aids autonomous vehicle operations.

Developed using Python3, OpenCV, YOLO models, computer vision, and object detection, it runs on the NVIDIA ORIN AGX platform.

Key Highlights:

- Accurate Vehicle Detection: Detects vehicles across various environments and conditions.

- Low Latency: Enables near real-time decision-making for autonomous vehicles and traffic flow.

- Robust Performance: Performs reliably under different lighting, weather, and road conditions.

- Real-Time Processing: Handles video feeds instantly with minimal delay.

The result is an ADAS-enabled approach that improves driver awareness, reduces collision risks, and enhances overall road safety.
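Object detectors often return several overlapping boxes for the same vehicle or pedestrian. A standard clean-up step is confidence filtering followed by non-maximum suppression (NMS), sketched below in plain Python. Detection frameworks normally provide this step built in, so the `Detection` type, the function name, and the thresholds here are illustrative assumptions.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
    score: float                            # detector confidence, 0..1
    label: str                              # e.g. "car" or "person"


def iou(a, b) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections: List[Detection],
                        score_min: float = 0.25,
                        iou_max: float = 0.5) -> List[Detection]:
    """Greedy per-class NMS: keep the highest-scoring box and drop
    same-class boxes that overlap it by more than iou_max."""
    candidates = sorted(
        (d for d in detections if d.score >= score_min),
        key=lambda d: d.score,
        reverse=True,
    )
    kept: List[Detection] = []
    for det in candidates:
        if all(det.label != k.label or iou(det.box, k.box) <= iou_max
               for k in kept):
            kept.append(det)
    return kept
```

Suppression is done per class, so a pedestrian standing in front of a car is not removed just because the two boxes overlap.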

Face Detection and Recognition

This solution detects and recognizes faces in real-time from live video feeds, making it easier to unlock devices, access services, and enhance safety in public spaces.

Built with Python3, OpenCV, MobileNet, and computer vision techniques, it operates on the NVIDIA ORIN AGX platform.

Key Highlights:

- Real-time Detection: Swiftly and accurately detects faces with minimal latency.

- High Accuracy: Uses advanced algorithms and machine learning to reduce false positives and negatives.

- User Privacy and Security: Maintains privacy and complies with data protection regulations.

- Scalability and Efficiency: Handles multiple faces simultaneously and runs efficiently on various hardware.

The result is a reliable face detection and recognition approach that improves security while ensuring a seamless user experience.
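Recognition is commonly done by comparing a face embedding against a gallery of enrolled embeddings. The sketch below assumes a network (MobileNet-based, in this demo) has already turned each face crop into a numeric vector; the cosine-similarity matching, the `identify` function, and the 0.6 threshold are illustrative assumptions, not details taken from the demo.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(probe: Sequence[float],
             gallery: Dict[str, Sequence[float]],
             min_similarity: float = 0.6) -> Optional[str]:
    """Return the enrolled name whose embedding is most similar to the
    probe, or None if nothing reaches min_similarity (unknown face)."""
    best_name, best_score = None, min_similarity
    for name, reference in gallery.items():
        score = cosine_similarity(probe, reference)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name
```

Raising `min_similarity` reduces false accepts at the cost of more false rejects, which is the trade-off behind the accuracy highlight above.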

The Embedded Advantage: Why Not Cloud?

Cloud computing offers robust processing and storage capabilities, but for sensitive situations and real-time applications, embedded systems have significant advantages.

By processing data locally without relying on internet connectivity, embedded platforms, such as those built on the NVIDIA ORIN AGX platform, offer low latency and excellent reliability.

For mission-critical jobs, where delays or outages could have major repercussions, this is essential.
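The latency argument can be made concrete with a simple frame-budget check: at a given frame rate, every stage of the pipeline has to fit inside the time between two frames. The stage timings below are made-up placeholder numbers for illustration only, not measurements from any platform.

```python
from typing import Dict


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds."""
    return 1000.0 / fps


def fits_budget(stage_ms: Dict[str, float], fps: float) -> bool:
    """True if the summed stage latencies fit within one frame period."""
    return sum(stage_ms.values()) <= frame_budget_ms(fps)


# Placeholder timings (assumptions, not benchmark results).
on_device = {"capture": 5.0, "inference": 18.0, "postprocess": 4.0}
via_cloud = dict(on_device, network_round_trip=60.0)

print(fits_budget(on_device, fps=30))  # 27 ms against a ~33.3 ms budget
print(fits_budget(via_cloud, fps=30))  # 87 ms against the same budget
```

With these placeholder values the on-device pipeline keeps up with a 30 FPS camera, while adding a network round trip does not. Real numbers depend on the model, the hardware, and the network.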

Furthermore, by keeping sensitive data on-device rather than sending it across networks, embedded solutions improve data security and privacy.

Additionally, they reduce bandwidth requirements and offer consistent performance even in remote or bandwidth-constrained areas.

In summary, many real-time AI and vision applications favor embedded systems over cloud solutions when operational independence, data security, and quick reaction are top concerns.

How Logic Fruit Enables Real-Time AI

Logic Fruit specializes in building effective, affordable AI/ML and vision solutions that deliver strong performance with fewer resources.

By utilizing specialized hardware and in-depth architecture knowledge, we expedite development and deployment across a variety of edge platforms.

- Efficient Hardware: We offer EHPC hardware in multiple form factors such as PCIe and VPX, based on Xilinx and Intel FPGAs, x86 and ARM CPUs, as well as NVIDIA and AMD GPUs.

- Faster Development: Using Edge-AI building blocks and expertise, we reduce development time by at least 30%.

- Wide Processor Support: Our experience spans processors from TI, NVIDIA, Xilinx, Intel, Lattice, and others.

- Complete Environment: We provide a full development and verification environment for seamless project execution.

- Performance Acceleration: AI-ML and vision-specific edge-AI building blocks, including hard and soft IPs, enhance system performance.

Conclusion

Smart vision is being revolutionized by real-time AI on embedded systems, which provide secure, dependable, and low-latency processing right at the edge.

This method ensures quick decision-making and improved privacy by overcoming the drawbacks of cloud dependency.

The increasing power and efficiency of embedded systems make possible a wide range of applications, from security surveillance to driverless cars, where prompt and precise vision capabilities are essential.

Optimized AI models, specialized hardware, and scalable programming frameworks designed for embedded contexts will be key components of smart vision in the future.

With their emphasis on resource-efficient solutions and seamless integration, embedded AI systems will continue to spur innovation across industries and make real-time vision smarter, faster, and more accessible.